The New Economics of AI: Are You Overpaying for Performance?

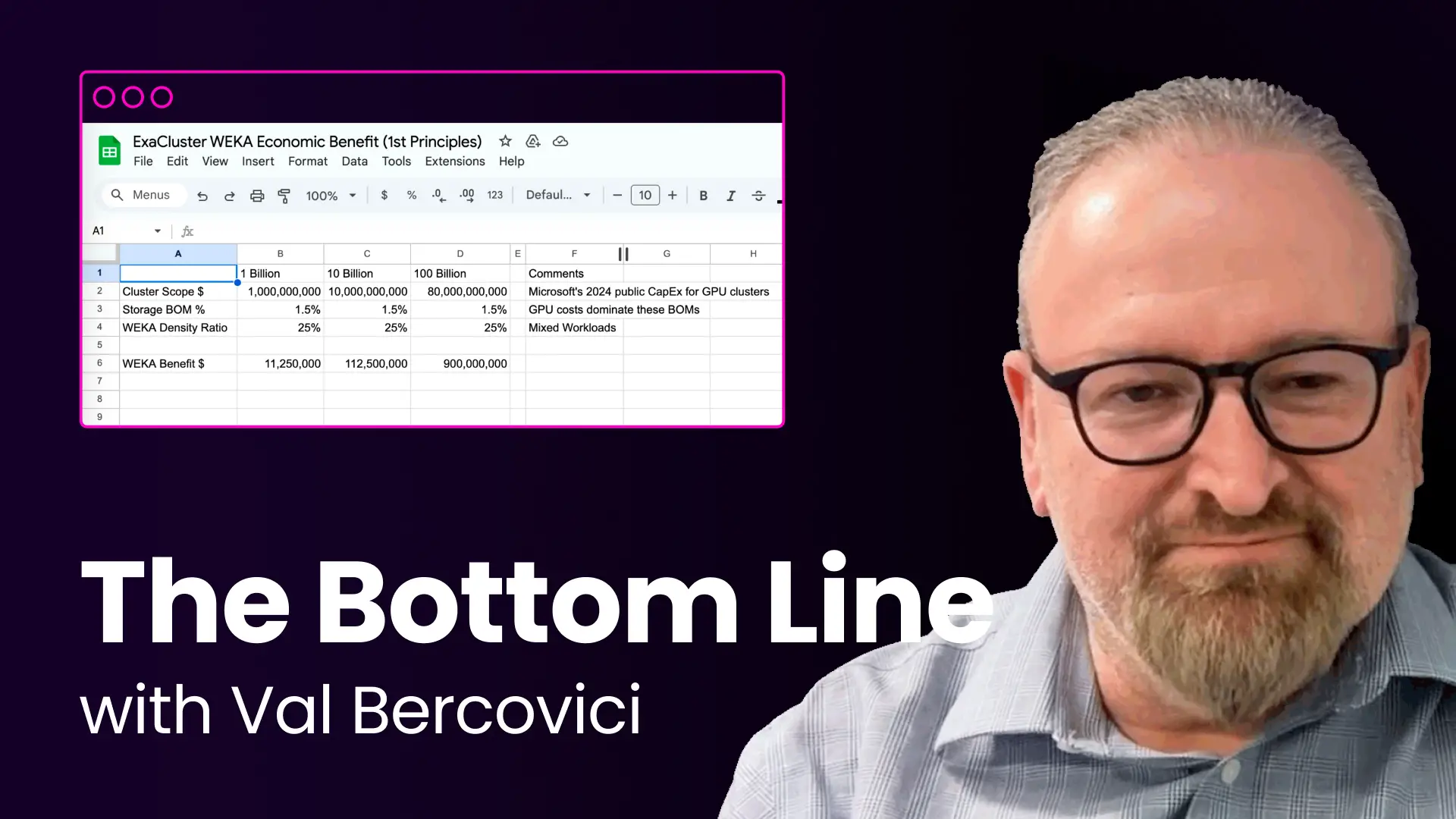

The economics of token processing are emerging as a decisive factor in infrastructure selection. Affordable solutions that reduce the cost of token generation directly affect scalability and adoption.

Optimize for low-cost token generation

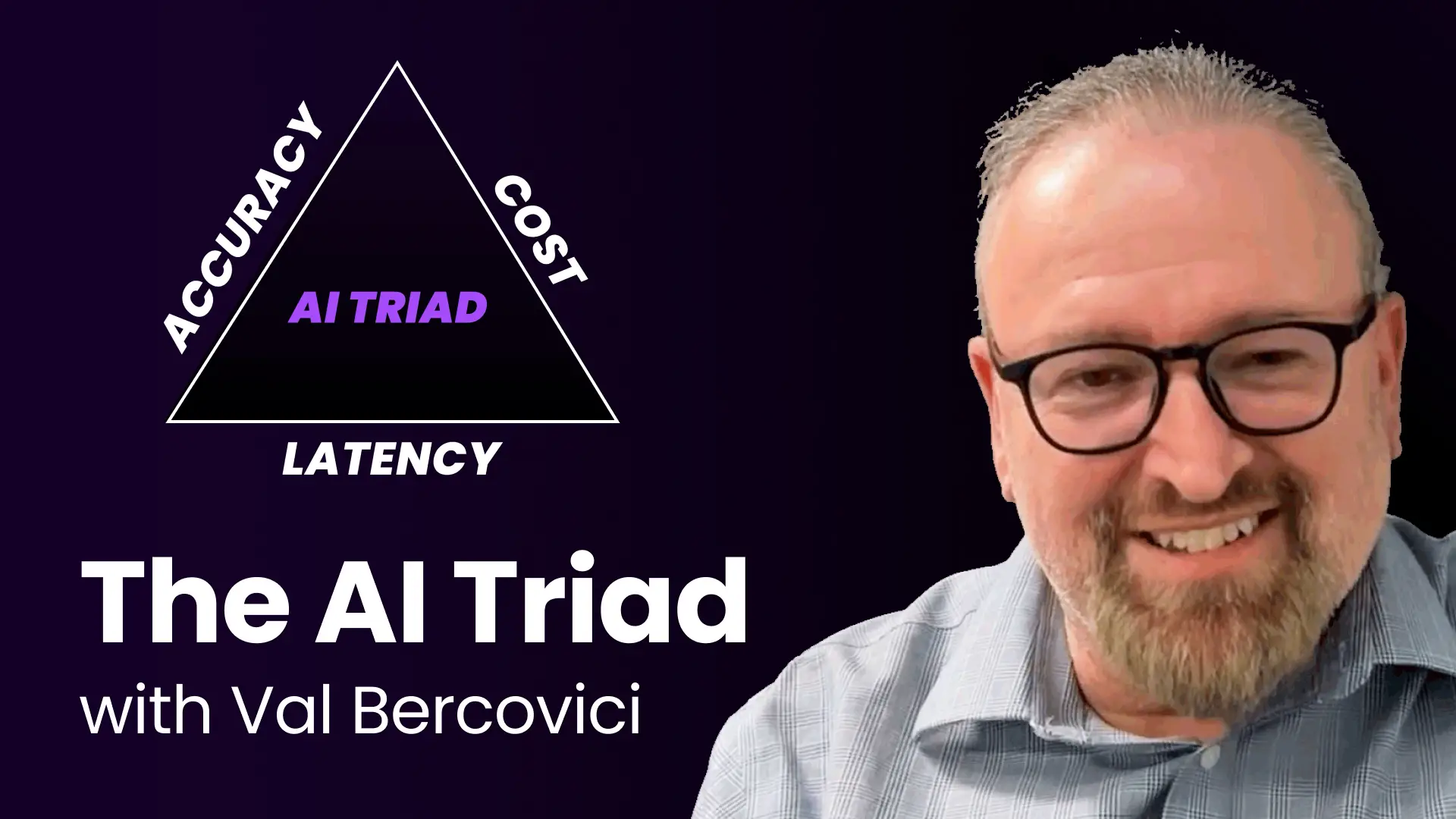

AI workflows are constrained by three forces: cost, latency, and accuracy. Historically, improving one has meant sacrificing another, but infrastructure efficiencies – such as reducing memory dependency while preserving accuracy – can break this cycle.

Reduce latency for AI inference

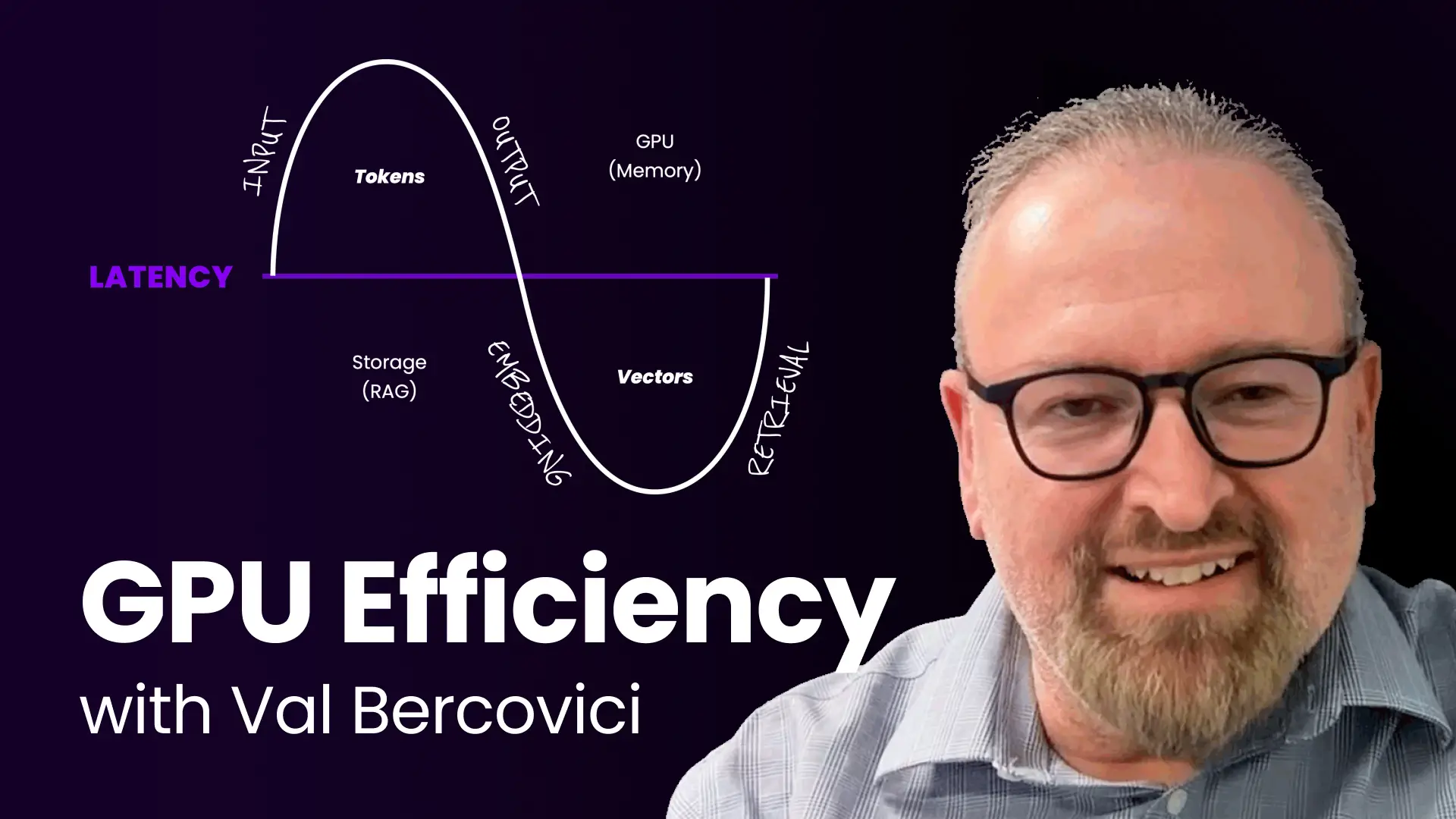

Every millisecond saved in AI token inference translates to efficiency gains and reduced infrastructure overhead. WEKA’s GPU-optimized architecture enables token processing at microsecond latencies, removing traditional bottlenecks and enabling high-speed data streaming.

WEKA’s Augmented Memory Grid: Enabling the Token Warehouse for AI Inference

WEKA Augmented Memory Grid™ extends GPU memory to petabyte scale, eliminating the bottlenecks of traditional DRAM and delivering ultra-low-latency token recall. AI models can access and reuse precomputed token embeddings instead of recomputing them, improving inference speed and efficiency. The result is scalable, high-performance token storage and retrieval across massive workloads.
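To make the idea of reusing precomputed token state concrete, here is a minimal Python sketch of a prefix cache. It is an illustration only and not WEKA's API or implementation: the `PrefixCache` class, its methods, and the stand-in computation are all hypothetical, and a plain in-memory dictionary takes the place of any real storage tier.

```python
from typing import Dict, List, Tuple


class PrefixCache:
    """Toy store mapping a token prefix to its precomputed state (hypothetical)."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[int, ...], List[float]] = {}
        self.computed_tokens = 0  # tokens that had to be processed from scratch

    def _compute_state(self, state: List[float], token: int) -> List[float]:
        # Stand-in for the expensive per-token model computation (an assumption).
        self.computed_tokens += 1
        return state + [float(token) * 0.5]

    def encode(self, tokens: List[int]) -> List[float]:
        # Look for the longest prefix whose state is already cached.
        start = 0
        state: List[float] = []
        for end in range(len(tokens), 0, -1):
            cached = self._store.get(tuple(tokens[:end]))
            if cached is not None:
                start, state = end, list(cached)
                break
        # Process only the tokens after that prefix, caching each new prefix.
        for i in range(start, len(tokens)):
            state = self._compute_state(state, tokens[i])
            self._store[tuple(tokens[: i + 1])] = list(state)
        return state


cache = PrefixCache()
cache.encode([1, 2, 3, 4])        # cold start: all 4 tokens are computed
cache.encode([1, 2, 3, 4, 5, 6])  # shared prefix is reused: only 2 new tokens
print(cache.computed_tokens)      # 6 rather than 10
```

In this sketch the second request pays only for the two tokens that follow the shared prefix. The larger the cache that can be kept within low-latency reach, the more often a request finds a reusable prefix, which is the cost and latency argument made above.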

Interested in learning more?