AI: A Complete Guide in Simple Terms

What is AI?

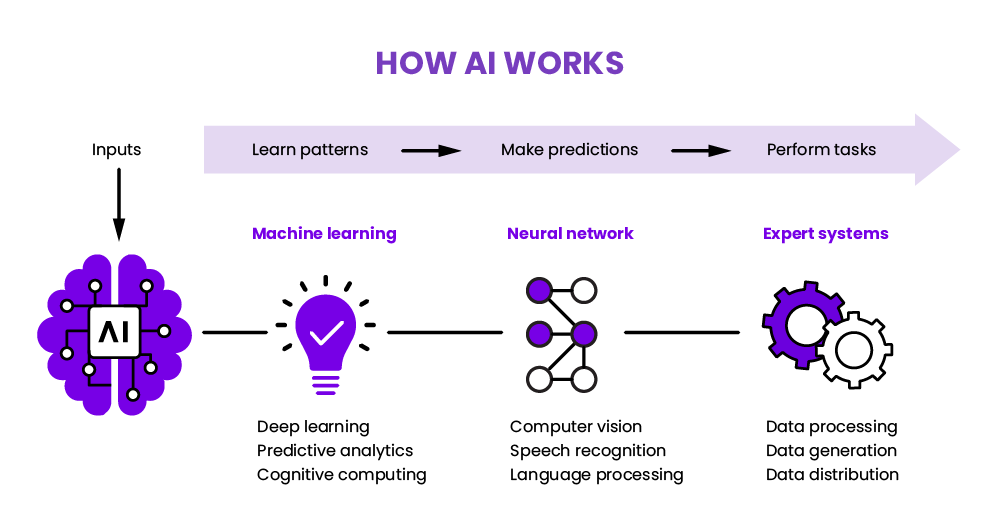

The term artificial intelligence (AI) refers to a field of computer science that designs machines advanced enough to perform tasks that in the past required human intelligence to achieve. AI scientists develop algorithms and systems that acquire, process, and analyze data, recognize patterns, and make decisions.

The main goal of AI is to replicate or simulate the cognitive abilities of humans, such as communication, learning, perception, problem-solving, and reasoning. It encompasses various subfields, including computer vision, expert systems, machine learning, natural language processing, and robotics.

Understanding AI Technology: What is AI Technology in Historical Context?

To understand AI, it is important to see how the field developed. The technology itself was directly inspired by the human brain and its function, and each series of advances was made possible by new understandings of how the human brain works as well as technological advances.

AI development began in the mid-20th century when researchers invented electronic computers. Researchers like Alan Turing and John von Neumann proposed the idea of machines that could simulate human thought processes, and Turing proposed the “Turing test” as a means for testing a machine’s ability to exhibit intelligent, human-like behavior.

The Dartmouth Conference in 1956 is often seen as the birth of AI as a formal research field. There, researchers coined the term “artificial intelligence” and set the goal of creating machines that could simulate every aspect of human intelligence.

In the 1950s-1960s, researchers focused on developing early AI programs that could solve problems symbolically using logical reasoning. By the 1970s, AI research moved towards knowledge-based systems, which represented knowledge in the form of rules and used inference engines to reason and solve problems.

Then, in the 1980s and 1990s machine learning and neural networks brought new approaches to AI. Machine learning algorithms, such as decision trees and neural networks, allowed systems to learn patterns and make predictions based on data. This period also saw the rise of expert systems and the development of natural language processing (NLP) techniques.

From the turn of the century through the 2010s, big data and increased computational power made more advanced deep learning practical. Deep neural networks with multiple layers became capable of automatically learning hierarchical representations, enabling breakthroughs in computer vision, speech recognition, and much more sophisticated NLP systems.

More recently, breakthroughs in reinforcement learning and generative AI models are being applied in various domains, including self-driving cars, virtual assistants, healthcare diagnostics, finance, and more. This is a large part of why AI is important: it is already here, all around us.

How Does AI Work? AI Technology Explained

Deep learning is a critical part of how modern AI works, and it is inspired by the structure and function of the human brain. AI technology processes and analyzes data using algorithms and computational models. These tools allow the system to recognize patterns and make decisions or predictions.

Deep learning AI technology involves the use of artificial neural networks (ANNs) with multiple connected layers of artificial neurons, or nodes, called “units.” Each unit receives inputs, multiplies each input by a weight, performs a calculation on the weighted sum (typically by applying an activation function), and passes the result to the next layer. Deep learning models extract features or hierarchical representations from the data automatically, enabling them to capture complex patterns and relationships.
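To make the layer-by-layer flow described above concrete, here is a minimal sketch of a forward pass through a tiny fully connected network in plain Python. The layer sizes, the weight and bias values, and the choice of a sigmoid activation are all illustrative assumptions rather than part of any particular system.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """Compute one layer: each unit takes a weighted sum of its inputs,
    adds its bias, and applies the activation function."""
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        outputs.append(sigmoid(weighted_sum))
    return outputs

def network_forward(inputs, layers):
    """Pass the inputs through every layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden units -> 1 output unit.
EXAMPLE_LAYERS = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]

prediction = network_forward([1.0, 2.0], EXAMPLE_LAYERS)
print(prediction)  # a list holding a single value between 0 and 1
```

In a real deep learning model the weights are not written by hand as they are here; they are learned during training.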

Model architecture design involves defining the number and type of layers, the number of units in each layer, and the connections between them. Common architectures include convolutional neural networks (CNNs), which are mainly used for image data; feedforward neural networks, which are mainly used for supervised learning; and recurrent neural networks (RNNs), which are mainly used for sequential data.

During the training phase, the deep learning model learns from labeled data by gradually adjusting the weights and biases of its neural network. Typically, training uses an optimization algorithm that iteratively updates the model’s parameters to minimize the difference between the actual and predicted outputs.

After the deep learning model is trained, it can make predictions on new, unseen data. The trained model takes input data, performs forward propagation, and generates predictions or class probabilities based on the learned patterns.
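The training and prediction phases can be sketched with the simplest possible model. The example below is not a deep network: it fits a single weight and bias with gradient descent on mean squared error, which is one common optimization approach. The data, learning rate, and number of epochs are assumptions chosen for illustration.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ~ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: predictions with the current parameters.
        preds = [w * x + b for x in xs]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Update step: move the parameters against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

def predict(w, b, x):
    """Inference on a new input using the learned parameters."""
    return w * x + b

# Hypothetical labeled data generated from y = 2x + 1.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0]
train_y = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = train_linear_model(train_x, train_y)
print(predict(w, b, 10.0))  # close to 21 for this data
```

Deep learning frameworks apply the same loop to millions of parameters, computing the gradients automatically instead of by hand.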

AI Development and AI Models

An AI model is a computational representation of an AI system. These models are designed to learn patterns, make predictions, or perform specific tasks based on the input data they receive. Developers can create AI models using various techniques and algorithms, depending on the problems the models are designed to solve.

Here are a few common types of AI models:

Statistical models. Statistical models analyze data and make predictions using mathematical models and statistical techniques. They are often based on probabilistic methods and can handle structured or tabular data. Examples include linear regression, logistic regression, decision trees, and support vector machines.

Machine learning models (MLMs). MLMs automatically learn patterns and relationships from data and can adapt and improve their performance with experience. Popular machine learning algorithms include k-nearest neighbors, naive Bayes, random forests, and gradient boosting algorithms like XGBoost and LightGBM.

Deep learning models (DLMs). DLMs are a subset of machine learning models that are based on artificial neural networks with multiple layers. They can automatically learn hierarchical representations of data and excel at tasks such as image and speech recognition, NLP, and sequence generation. CNNs and RNNs are commonly used DLMs.

Reinforcement learning models (RLMs). RLMs learn by interacting with an environment and receiving feedback in the form of rewards or penalties. These models aim to find the optimal actions or policies that maximize cumulative rewards. Reinforcement learning has been successful in applications like game playing and robotics.

Generative models. These models generate new data similar to the training data distribution. They can generate realistic images, text, or audio samples. Examples include Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs).
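As one concrete instance of the machine learning models listed above, the following sketch implements a k-nearest neighbors classifier from scratch. The data points, labels, and the value of k are hypothetical; a production system would more likely rely on an established library.

```python
from collections import Counter
import math

def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    distances.sort(key=lambda pair: pair[0])
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical 2-D data: two loose clusters labeled "small" and "large".
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
labels = ["small", "small", "small", "large", "large", "large"]

print(knn_predict(points, labels, (1.2, 1.4)))  # small
print(knn_predict(points, labels, (8.7, 8.2)))  # large
```

The model has no explicit training step: it stores the examples and defers all of the work to prediction time.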

What are AI pipelines?

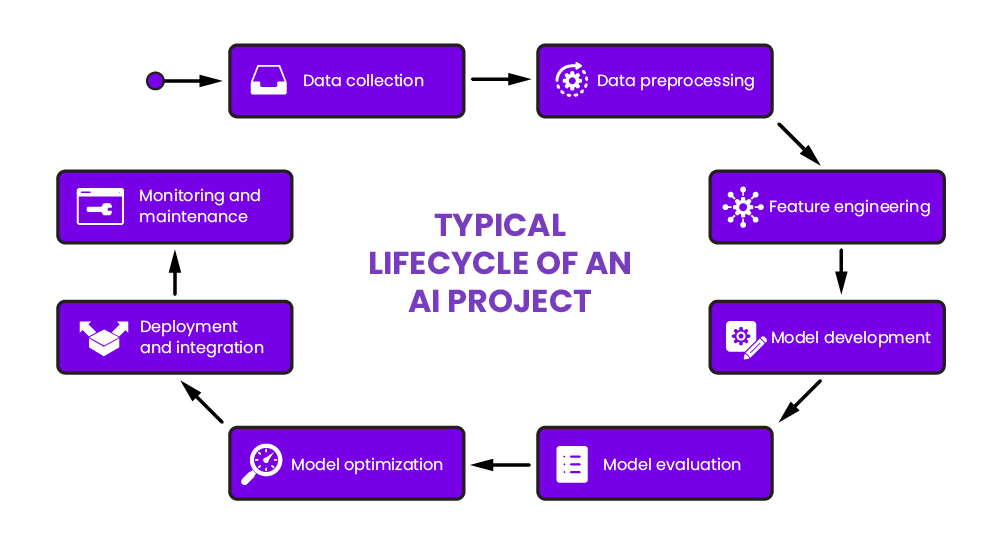

An AI pipeline or AI data pipeline refers to the sequence of steps or stages involved in developing and deploying AI systems. An AI pipeline encompasses the entire lifecycle of an AI project, from data collection and preprocessing to model training, evaluation, and deployment. It provides a systematic framework for managing and organizing the various tasks and components involved in AI development.

Here are the typical stages or components found in an AI pipeline:

Data collection. The pipeline begins with gathering relevant data to train and evaluate the AI model. It can come from various sources, such as databases, APIs, sensors, or human-generated annotations.

Data preprocessing. Data often needs to be preprocessed, cleaned, and normalized to prepare it for training before it is input into the AI model.

Feature engineering. Feature engineering involves extracting, selecting, or creating relevant data features to help the AI model learn patterns and make more accurate predictions. This step may involve domain expertise, statistical analysis, or automated feature selection techniques.

Model development. The AI model architecture and algorithm are selected in this stage based on the specific problem. Development can involve choosing from statistical models, machine learning algorithms, or deep learning architectures. The model is then trained using the prepared data.

Model evaluation. The performance and generalization capabilities of the trained model are evaluated according to various metrics depending on the task, such as accuracy, precision, recall, F1 score, or area under the curve (AUC). Cross-validation or hold-out validation sets are often used to estimate the model’s performance.

Model optimization. If the model does not meet the desired performance criteria, it can be optimized with hyperparameter tuning, model architecture adjustment, or regularization techniques to improve its performance.

Deployment and integration. Once the model performs satisfactorily, it can be deployed into the production environment, with scalability, reliability, security, and monitoring taken into account. Deployment involves integrating the model into a larger system or application, often using APIs or microservices.

Monitoring and maintenance. After deployment, the AI system must be monitored to ensure continued performance and reliability. This includes monitoring data drift, model performance degradation, and handling updates or retraining as new data becomes available.

AI pipelines provide a structured approach to AI development, allowing teams to collaborate, track progress, and ensure the quality and efficiency of the AI systems they create. They help streamline the workflow and facilitate the development of robust and reliable AI solutions.
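The stages listed above can be sketched as a chain of small functions. This is a toy illustration under stated assumptions: the data is hard-coded instead of collected, the "model" is a single learned threshold, and every function name is hypothetical. It still shows preprocessing, training, a hold-out split, and evaluation with precision, recall, and F1.

```python
def preprocess(values):
    """Min-max normalize raw values into the range [0, 1]."""
    low, high = min(values), max(values)
    return [(v - low) / (high - low) for v in values]

def train_threshold_model(features, labels):
    """'Train' a one-parameter model: the midpoint between the class means."""
    positives = [f for f, y in zip(features, labels) if y == 1]
    negatives = [f for f, y in zip(features, labels) if y == 0]
    return (sum(positives) / len(positives) + sum(negatives) / len(negatives)) / 2

def predict(threshold, features):
    return [1 if f >= threshold else 0 for f in features]

def evaluate(y_true, y_pred):
    """Return precision, recall, and F1 for the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}

def run_pipeline(raw_values, labels, train_size):
    """Chain the stages: preprocess, split, train, predict, evaluate."""
    # For brevity the normalization uses the full dataset; in practice it
    # would be fit on the training split only to avoid leaking test data.
    features = preprocess(raw_values)
    train_x, test_x = features[:train_size], features[train_size:]
    train_y, test_y = labels[:train_size], labels[train_size:]
    threshold = train_threshold_model(train_x, train_y)
    return evaluate(test_y, predict(threshold, test_x))

# Hypothetical sensor readings with binary labels (1 = "high").
raw = [10, 80, 20, 90, 15, 85, 30, 70, 25, 95]
labels = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]
print(run_pipeline(raw, labels, train_size=6))
```

Keeping each stage as a separate function mirrors the pipeline idea: any stage can be swapped out or rerun without rewriting the others.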

AI Pipeline Architecture

AI pipeline architecture refers to the design and structure of the pipeline that supports the development, deployment, and management of AI systems. The specific architecture of an AI pipeline can vary depending on the organization, project requirements, and technologies used. However, there are common components, principles, and considerations that are typically involved in constructing an AI pipeline architecture:

Data storage and management. AI pipelines demand a robust, scalable AI storage system to handle the massive volumes of data that AI projects need. This may involve databases, data lakes, data oceans, or other distributed AI storage systems. Data management techniques, such as data versioning, metadata management, and data governance, ensure data quality and traceability.

Data preprocessing and transformation. This component of the pipeline handles the preprocessing and transformation of raw data into a suitable format for model training. It includes tasks such as data cleaning, feature extraction, normalization, and dimensionality reduction. Tools and frameworks for data preprocessing are often used in this stage.

Model development and training. Building and training AI models involves selecting appropriate algorithms, architectures, and frameworks based on the problem and available resources. GPUs or specialized hardware accelerators may be utilized to speed up the training process.

Model evaluation and validation. Trained models are assessed with appropriate evaluation metrics and techniques, using validation sets or cross-validation to estimate performance and compare different models or hyperparameters. Visualization tools and statistical analysis techniques may help users interpret the evaluation results.

Model deployment and serving. The trained and evaluated model needs to be deployed into a production environment where it can serve predictions or perform tasks in real-time. This involves setting up APIs, microservices, or serverless architectures to expose the model’s functionality. Containerization technologies like Docker and orchestration tools like Kubernetes are often used to manage the deployment process.

Monitoring and management. This involves monitoring performance, detecting anomalies or drift in data distributions, and handling retraining or updates as necessary. Logging, error tracking, and performance monitoring tools help in effective management of deployed models.

Collaboration and version control. AI pipelines often involve collaboration among team members, which requires version control systems and collaborative platforms to manage code, data, and model artifacts and to support code sharing and reproducibility.

Security and privacy. Security and privacy concerns relate to the data used, the models deployed, and interactions with users or external systems. Security measures such as access controls, encryption, and secure communication protocols are crucial to protect sensitive data and ensure compliance with privacy regulations.
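The evaluation and validation component above mentions cross-validation. As a rough sketch of what that involves, the function below splits sample indices into k folds so that each fold serves once as the validation set. The function name is hypothetical, and this version does not shuffle the data, which real workflows usually do first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size

for train_idx, val_idx in k_fold_indices(6, 3):
    print(train_idx, val_idx)
# [2, 3, 4, 5] [0, 1]
# [0, 1, 4, 5] [2, 3]
# [0, 1, 2, 3] [4, 5]
```

A model would be trained once per fold on the training indices and scored on the validation indices, and the k scores would then be averaged.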

The 4 Types of AI

There are four basic, different types of AI models that also represent incremental steps in AI technology development.

Reactive AI. Reactive AI systems are the most basic type, lacking memory and the ability to use past experiences for future decisions. Reactive machines can only respond to current inputs and do not possess any form of learning or autonomy. Examples of reactive AI include computers that play chess by analyzing the current board state to make the best move, or voice assistants that respond to user commands without any contextual understanding.

Limited memory AI. These systems make informed decisions based on a limited set of past experiences that they retain. These systems enhance their functionality by incorporating historical data and context. Self-driving cars often use limited memory AI to make driving decisions, considering recent observations such as the position of nearby vehicles, traffic signals, and road conditions.

Theory of mind. AI systems with a theory of mind possess an understanding of human emotions, beliefs, intentions, and thought processes. These systems can attribute mental states to others and predict their behavior based on those attributions. Theory of mind AI is still largely theoretical, but as an area of research it aims to enable machines to interact with humans more effectively by understanding their mental states and social cues.

Self-aware AI. AI systems that are truly self-aware with actual consciousness or the ability to recognize themselves and their own thoughts and emotions are as yet a highly speculative, unrealized concept, but they remain of philosophical and ethical interest and discussion.
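The difference between the first two types can be shown in a few lines. In this hypothetical braking example, the reactive rule looks only at the current distance reading, while the limited memory controller keeps a short window of recent readings and also reacts to how quickly the gap is closing. The class name, thresholds, and readings are all assumptions made for illustration.

```python
from collections import deque

def reactive_brake(distance_m, safe_distance_m=10.0):
    """Reactive rule: decide from the current reading only, with no memory."""
    return distance_m < safe_distance_m

class LimitedMemoryBrake:
    """Keeps only the last few readings and reacts to how fast the gap is closing."""

    def __init__(self, memory_size=3, safe_distance_m=10.0, max_closing_m=2.0):
        self.readings = deque(maxlen=memory_size)  # older readings are dropped
        self.safe_distance_m = safe_distance_m
        self.max_closing_m = max_closing_m

    def should_brake(self, distance_m):
        self.readings.append(distance_m)
        if distance_m < self.safe_distance_m:
            return True
        if len(self.readings) < 2:
            return False
        # Average closing distance per step over the remembered window.
        closing = (self.readings[0] - self.readings[-1]) / (len(self.readings) - 1)
        return closing > self.max_closing_m

controller = LimitedMemoryBrake()
for reading in [30.0, 26.0, 21.0]:
    print(reactive_brake(reading), controller.should_brake(reading))
# False False
# False True
# False True
```

The reactive rule never brakes here because every reading is above the safe distance, whereas the controller with memory notices that the gap is shrinking quickly.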

Strong AI vs Weak AI

“Artificial intelligence” describes both the overall field and specific technologies or systems that exhibit intelligent behavior. In addition to the 4 types of AI, AI is often classified by capability: weak AI; strong AI, also called artificial general intelligence (AGI); and super AI, which would surpass human intelligence.

What is weak AI? Weak or narrow AI systems are designed for particular tasks and specific problems within a limited domain. Some weak AI examples include voice assistants like Alexa or Siri, image recognition systems, recommendation algorithms, and chatbots.

What is strong AI? Strong or general AI systems possess the ability to understand, learn, and apply knowledge across various domains, essentially displaying human-level intelligence. True strong AI examples remain largely hypothetical for now.

What is Enterprise AI?

Enterprise AI refers to the application of AI technology and strategies in the enterprise context. AI for enterprise aims to enhance various aspects of business operations, decision-making processes, and customer interactions to gain competitive advantages and drive business outcomes.

AI in the enterprise context encompasses a broad range of applications and use cases across different industries. Here are some examples of how AI is applied in enterprise settings:

Automation and optimization. AI can automate and optimize repetitive and mundane tasks, such as data entry, document processing, or customer support inquiries. This can help businesses of any size increase operational efficiency, reduce costs, and free up human resources for more strategic or value-added activities.

Data-driven, powerful insights. AI enables organizations to analyze large volumes of structured and unstructured data to uncover patterns, trends, and insights. ML algorithms can be applied to data sets to identify correlations, predict outcomes, or detect anomalies, facilitating data-driven decision making and strategic planning.

Personalized user experience. AI-powered chatbots and virtual assistants deliver personalized, real-time customer support, answer inquiries, and assist with purchasing decisions. NLP and sentiment analysis techniques enable organizations to understand customer feedback, sentiment, and preferences, allowing for tailored marketing campaigns and improved customer satisfaction.

Predictive maintenance. AI can analyze sensor data from equipment to predict maintenance needs and optimize maintenance schedules. By detecting potential failures or anomalies in real-time, organizations can reduce downtime, minimize costly repairs, and improve overall equipment efficiency.

Supply chain optimization. AI can optimize supply chains by analyzing data from logistics, suppliers, demand forecasting, and other sources. AI algorithms can help businesses optimize inventory management, logistics routing, and demand forecasting, leading to cost savings, improved efficiency, and reduced stockouts.

Risk management and fraud detection. AI techniques such as machine learning and anomaly detection can be employed to identify and mitigate risks and detect fraudulent activities. AI models can analyze patterns and behaviors to flag suspicious transactions, insurance claims, or cybersecurity threats, enabling proactive risk management and fraud prevention.

Decision support. AI can support decision-making processes by providing insights and recommendations based on complex data analysis. AI-powered systems can assist business leaders with strategic planning, resource allocation, and identifying growth opportunities.
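As a small illustration of the anomaly detection mentioned under risk management and fraud detection, the sketch below flags values that sit far from the historical average. It uses a simple z-score rule, which is only one of many possible techniques; the amounts and the threshold of three standard deviations are assumptions.

```python
import statistics

def flag_anomalies(history, new_values, z_threshold=3.0):
    """Flag new values that lie more than z_threshold standard deviations
    from the mean of the historical values."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    return [v for v in new_values if abs(v - mean) / stdev > z_threshold]

# Hypothetical past transaction amounts and a batch of new ones.
past_amounts = [42.0, 38.5, 45.0, 40.0, 39.5, 43.0, 41.0, 44.5]
new_amounts = [41.5, 39.0, 950.0, 46.0]
print(flag_anomalies(past_amounts, new_amounts))  # [950.0]
```

Real fraud systems typically combine many signals and learned models, but the underlying idea of scoring how unusual an observation is remains the same.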

Enterprise AI solutions can also have some technical differences compared to AI applications in other domains:

Scalability and performance. Enterprise AI systems often deal with large volumes of data and complex computations. Scalability is crucial for managing massive data sets, growing user demand, and real-time or near real-time processing, and it may call for distributed computing, parallel processing, and/or specialized hardware acceleration.

Integration with legacy systems. Enterprises typically have established IT infrastructures and legacy systems that AI solutions must seamlessly integrate with to leverage existing data sources, workflows, and business processes. This requires compatibility and interoperability with different data formats, databases, APIs, and software architectures.

Data privacy and security. Enterprises must handle sensitive data including customer information, financial data, and proprietary business data securely, comply with relevant regulations such as GDPR and HIPAA, and employ appropriate measures for data anonymization, encryption, access controls, and audit trails. Techniques like federated learning or differential privacy can protect sensitive data in AI models.

Transparency and interpretability. Enterprise AI demands transparency and interpretability, especially in regulated industries where users may be required to explain how an AI model arrived at a particular prediction or recommendation to secure regulatory compliance or user trust.

Compliance and ethics. Enterprises must comply with the law and ethical guidelines when deploying AI solutions. This includes ensuring fairness, avoiding bias, and preventing discrimination in AI models, as well as considering the ethical implications of policies for data usage, privacy, and human-machine interactions.

Collaboration and governance. Collaborative development, version control, and governance frameworks are important to effective management of AI projects. This includes establishing processes for code sharing, model versioning, documentation, and collaboration platforms. It is necessary to establish responsible governance practices for data management, model deployment, and ongoing monitoring to ensure compliance and accountability.
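Of the privacy techniques mentioned above, differential privacy is the easiest to sketch briefly. The example below applies the Laplace mechanism to a counting query: it adds random noise scaled to 1 / epsilon so that the presence or absence of any one record is harder to infer from the result. The function names, the sample data, and the epsilon value are hypothetical.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of the records that match `predicate`.

    A counting query has sensitivity 1 (one person changes the count by at
    most 1), so the Laplace mechanism adds noise with scale 1 / epsilon.
    """
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical customer ages; the true count of ages >= 40 is 3.
ages = [25, 41, 37, 52, 60, 33]
print(private_count(ages, lambda age: age >= 40, epsilon=0.5))
```

Smaller values of epsilon add more noise and give stronger privacy at the cost of accuracy, so the choice of epsilon is a policy decision as much as a technical one.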

AI vs ML Explained

AI is a broad field with many intersecting subcategories that are often confused in conversation, yet there is an important difference between AI and ML.

What are the principal differences between AI vs machine learning (ML)?

Machine learning (ML), a subset of artificial intelligence, is the ability of computer systems to learn to make decisions and predictions from observations and data. Machine learning is used in many applications, including life sciences, financial services, and speech recognition.

ML involves the development of models and algorithms that allow for this learning. These models are trained on data, and by learning from this data, the machine learning model can generalize its understanding and make predictions or decisions on new, unseen data.

Machine learning algorithms can be categorized as supervised learning, unsupervised learning, semi-supervised learning, or reinforcement learning, based on their characteristics, goals, and approaches to learning from data.

Supervised learning is a type of ML model that learns from labeled data. In supervised learning, the training data includes input samples (features) and their corresponding desired output labels. The model learns to map inputs to outputs based on the labeled examples and can make predictions on unseen data.

Unsupervised learning is a type of ML model that learns from unlabeled data. In unsupervised learning, the training data does not have explicit output labels. The model learns to find patterns, structures, or relationships in the data without specific guidance. Clustering and dimensionality reduction are common tasks in unsupervised learning.
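Clustering can be illustrated with a minimal k-means sketch on one-dimensional data. No labels are supplied: the algorithm groups the values purely by proximity. The data and the starting centroids are assumptions, and the fixed iteration count stands in for a proper convergence check.

```python
def k_means_1d(values, centroids, iterations=10):
    """Cluster 1-D values: assign each to its nearest centroid, then move
    each centroid to the mean of its assigned values, and repeat."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Keep a centroid in place if no values were assigned to it.
        centroids = [
            sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical unlabeled measurements and two starting guesses.
data = [1.0, 1.2, 0.8, 9.0, 9.5, 10.5]
centers, groups = k_means_1d(data, centroids=[0.0, 5.0])
print(centers)  # one centroid near 1.0 and one near 9.67
print(groups)
```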

Semi-supervised learning is a hybrid of the two previous methods. This approach provides the learning algorithm with mostly unlabeled data along with a smaller portion of labeled training data. This often supports more rapid and effective learning on the part of the algorithm.

Reinforcement learning is often used for agents within a simulated environment (for example, an artificial agent in a video game). The approach leverages the concepts of cumulative reward and Markov decision processes to teach optimal actions in dynamic settings. It is often used in online games and in non-gaming environments like swarm intelligence modeling, simulations, and genetic-modeling algorithms.
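The reward-driven learning described above can be sketched with tabular Q-learning, one widely used reinforcement learning algorithm. The environment here is a hypothetical five-state corridor in which the agent earns a reward only by reaching the rightmost state; the learning rate, discount factor, exploration rate, and episode count are assumptions.

```python
import random

N_STATES = 5          # states 0..4 laid out in a corridor; state 4 is the goal
ACTIONS = (-1, +1)    # move left or move right

def step(state, action):
    """Deterministic environment: reward 1 only when the goal is reached."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward, next_state == N_STATES - 1

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            best = max(q[(state, a)] for a in ACTIONS)
            greedy = [a for a in ACTIONS if q[(state, a)] == best]
            # Explore with probability epsilon, otherwise act greedily
            # (ties are broken at random).
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = rng.choice(greedy)
            next_state, reward, done = step(state, action)
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Move the estimate toward reward + discounted future value.
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q

q_table = q_learning()
policy = [max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]: move right in every non-goal state
```

The discount factor gamma is what turns individual rewards into a cumulative objective: rewards that arrive later count for less, so the agent learns to prefer the shortest route to the goal.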

So, what about the deep learning from the discussion at the beginning of this guide? There are various differences and connections between AI vs machine learning vs deep learning.

AI as a broader field is focused on all intelligent systems that can simulate human intelligence, understand, learn, and perform tasks. It encompasses various subfields, including computer vision, machine learning, and others.

Deep learning is a subfield of ML that focuses on the development and training of neural networks with multiple layers. Deep learning models, called deep neural networks, are designed to automatically learn hierarchical representations of data. They excel in tasks such as image and speech recognition, natural language processing, and recommendation systems.

There are several other areas to be aware of, as well.

Large Language Models

Large language models are complex, sophisticated AI models designed to understand and generate human language. They are trained on vast amounts of text data and utilize deep learning techniques, such as neural networks, to perform language-based tasks including text generation, translation, summarization, question-answering, and sentiment analysis. They learn to understand the intricacies of grammar, semantics, and context through exposure to massive amounts of text from diverse sources.

These models have revolutionized natural language processing (NLP) tasks. One notable example of a large language model is OpenAI’s GPT (Generative Pre-trained Transformer) series. They have wide-ranging applications and can assist with automated customer support, content generation, enhanced chatbots, language translation, research, and advanced natural language understanding and human-computer interaction.

Neural Networks

Neural networks are computational models inspired by the human brain’s structure and function. They are a fundamental component of deep learning, a subfield of machine learning. Neural networks are made up of interconnected artificial neurons or nodes, organized into layers.

The power of neural networks lies in their ability to glean complex patterns and representations from data. Neural networks adjust their weights during training based on example input-output pairs or a loss function that measures the discrepancy between predicted and desired outputs.

Training typically involves optimization algorithms that iteratively update the weights to minimize error. Neural networks are increasingly popular due to their ability to adapt to diverse data types and to achieve strong results in speech recognition, image classification, and natural language understanding.

Conversational AI

Conversational AI is the set of technologies and systems that enable computers or machines to engage in human-like conversations with users. It combines natural language processing (NLP), natural language understanding (NLU), dialog management, speech recognition and synthesis, and natural language generation (NLG) techniques to facilitate communication between humans and machines in a human-like, conversational manner. Conversational AI systems can be deployed as virtual assistants, chatbots, voice assistants, customer support systems, and more.

Wide Learning

Wide learning is an ML approach that combines deep learning and traditional feature engineering techniques. Wide learning models use deep learning to capture deep semantic relationships and feature engineering to capture wide feature interactions. This combination enables improved modeling of complex relationships in large-scale, sparse datasets.

What is Driving AI Adoption?

AI adoption is being driven by several factors that contribute to its growing popularity and implementation across various industries. Some key drivers of AI adoption include:

Increasing availability of data. The digital era has led to an explosion of data generation, making vast amounts of structured and unstructured data available for analysis. AI systems thrive on data, and the availability of large datasets enables more accurate and robust AI models.

Advancements in computing power. Rapid advancements in computing power, including the development of specialized hardware like GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units), have significantly enhanced the ability to train and deploy complex AI models. High-performance computing allows for faster processing, enabling AI algorithms to handle large-scale datasets and complex computations.

Improved ML techniques. More advanced ML algorithms, model architectures, and training techniques like deep learning, reinforcement learning, and transfer learning have improved the accuracy and performance of AI models across various domains, driving their adoption.

Cloud computing and infrastructure. Cloud computing platforms offer scalable and cost-effective infrastructure for hosting and running AI applications. Cloud providers offer pre-built AI services, APIs, and infrastructure, making it easier to leverage AI capabilities without costly upfront investments in hardware and software.

Industry-specific applications and use cases. As AI demonstrates its potential across a wide range of industries and use cases, from healthcare and finance to retail and manufacturing, it drives innovation, improves customer experiences, and helps users gain a competitive advantage.

Cost and efficiency. AI can automate repetitive and time-consuming tasks, reducing costs and increasing operational efficiency. By automating processes, organizations can reduce manual errors, streamline workflows, and allocate resources more effectively, and this is driving the adoption of AI technologies.

Enhanced insight. AI systems can analyze vast amounts of data, identify patterns, and generate valuable insights to support decision-making processes. By leveraging AI technologies, businesses can make data-driven decisions, improve forecasting, and gain a deeper understanding of customer behavior, market trends, and operational performance.

Regulatory and ethical considerations. An evolving regulatory landscape and ethical considerations are playing a role in AI adoption. Organizations and companies are recognizing the importance of responsible AI practices, ensuring compliance with regulations, addressing bias, and maintaining transparency and fairness in AI applications.

Why is AI Important?

Artificial intelligence is already here, so its advantages and disadvantages make the case for its importance. The advantages of AI include:

- Automation and cost. AI automates repetitive and mundane tasks and generally completes them much faster than humans can, reducing the time, effort, and money required for various tasks.

- Informed decision-making. AI techniques such as data mining, machine learning, and deep learning enable organizations to extract valuable insights from large and complex datasets in support of evidence-based decisions, reducing human bias.

- Enhanced accuracy. AI algorithms can achieve high levels of accuracy in many tasks and can process and analyze data with precision, improving the quality of results.

- 24/7 availability. AI systems can operate around the clock without breaks for tasks such as customer support, monitoring, or data processing.

- Personalization and UX. AI can reference user preferences and behaviors to provide personalized experiences and enable recommendation systems, targeted advertising, and customized interactions that enhance user satisfaction and engagement.

The disadvantages of AI include:

- High initial investment. Developing and implementing AI technologies can require substantial financial investment in infrastructure, computational resources, and skilled personnel.

- Job displacement and workforce changes. AI automation may displace certain jobs as tasks become automated, requiring reskilling or upskilling of the workforce.

- Ethical and privacy concerns. AI raises ethical concerns related to privacy, security, and bias. AI systems rely on training data which may be biased or incomplete, potentially reinforcing existing prejudices.

- Reliability. Organizations relying heavily on AI systems may face challenges if those systems fail or encounter errors. Reliance on AI can make businesses vulnerable to disruptions, technical glitches, or malicious attacks.

- Lack of human-like understanding. Despite advancements, AI systems still lack comprehensive contextual understanding and may struggle with sarcasm or nuances in language, limiting their ability to handle certain tasks.

- Lack of creativity and intuition. AI systems excel at tasks that can be defined by rules and patterns, but they often lack human-like creativity, intuition, and ability to think outside defined parameters. They struggle with tasks that require complex problem-solving or the ability to generate truly original ideas.

The importance of AI is essentially already established in its potential to transform industries, improve efficiency, and enable innovation. However, as with any powerful technology, it also brings challenges and considerations that need to be addressed, such as ethical implications, privacy concerns, and the impact on the workforce.

AI Governance and Regulations

As of 2023, the United States does not have comprehensive federal AI regulations, although in 2020 guidance was provided for the heads of federal agencies and departments. However, there have been discussions, initiatives, and proposals related to AI governance and regulation at both the federal and state levels.

See, for example:

- The National Telecommunications and Information Administration, a Commerce Department agency that advises the White House on telecommunications and information policy, has begun to seek public comments on potential accountability measures for AI systems.

- The White House Office of Science and Technology Policy (OSTP) has also proposed a Blueprint for an AI Bill of Rights.

- In 2019, the White House issued the Executive Order on Maintaining American Leadership in Artificial Intelligence, which directed federal agencies to prioritize AI investments, promote AI research and development, and enhance AI education and workforce development.

- The National Institute of Standards and Technology (NIST) has been actively involved in developing standards and guidelines for AI. It has published guidance on the trustworthiness of AI systems, including the NIST AI Risk Management Framework, which addresses the challenges of managing AI risks.

- The Federal Trade Commission (FTC) has focused on consumer protection and privacy concerns related to AI. It has issued guidelines and reports emphasizing the need for transparency, fairness, and accountability in AI systems, especially regarding bias, discrimination, and data protection.

- California passed the California Consumer Privacy Act (CCPA) and the California Privacy Rights Act (CPRA), which include provisions related to AI regulation and data privacy.

What is Generative AI?

Generative AI is a branch of AI that focuses on creating new content, such as images, text, music, or other forms of creative output. It involves training AI models on existing data and then using them to generate new content that resembles that data.

Generative AI models are designed to understand patterns and structures within the training data and use that knowledge to produce novel, coherent outputs. These models can generate content that closely resembles the examples they were trained on or create entirely new and original content based on the learned patterns.

There are several kinds of generative AI models:

Generative Adversarial Networks (GANs). GANs consist of two neural networks, a generator and a discriminator, which are trained in tandem. The generator network learns to produce realistic outputs, while the discriminator network learns to distinguish between real and generated examples. Through an adversarial training process, GANs can generate realistic images, videos, and other content.

Variational Autoencoders (VAEs). VAEs are probabilistic models that learn a compressed representation (latent space) of input data. They can generate new data by sampling from the learned latent space and then reconstructing it back into the original data space. VAEs are commonly used for generating images, text, and other structured data.

Recurrent Neural Networks (RNNs). RNNs are neural networks well suited to sequential data, such as text or music. They can learn the patterns and dependencies within the training data and generate new sequences based on that knowledge. RNNs are often used in natural language generation and music composition.
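The idea shared by these sequence generators, learning which element tends to follow which and then sampling new sequences, can be illustrated without a neural network. The sketch below is not an RNN. It swaps in a character-level Markov chain, a far simpler model, purely to show the learn-then-sample loop. The corpus, the function names, and the context length of two characters are all assumptions chosen for illustration.

```python
import random
from collections import defaultdict


def train_markov(text, order=2):
    """Map each `order`-character context to the characters seen after it."""
    model = defaultdict(list)
    for i in range(len(text) - order):
        context = text[i:i + order]
        model[context].append(text[i + order])
    return model


def generate(model, seed, length=40, rng=None):
    """Extend `seed` one character at a time by sampling from the model."""
    rng = rng or random.Random(0)  # fixed seed so runs are repeatable
    order = len(seed)
    out = seed
    for _ in range(length):
        choices = model.get(out[-order:])
        if not choices:  # context never seen in training: stop early
            break
        out += rng.choice(choices)
    return out


# Hypothetical toy corpus; a real model would train on far more text.
corpus = "the cat sat on the mat. the cat ate the rat. "
model = train_markov(corpus, order=2)
sample = generate(model, seed="th", length=30)
print(sample)
```

Every three-character window in the output also occurs in the corpus, which is why the result looks locally plausible while the overall sequence can be new. An RNN replaces the lookup table with learned weights that can capture much longer dependencies.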

Generative AI Examples

Generative AI use cases exist now in various fields:

- Art and design. Generative AI can create new visual art, logos and other designs, and more.

- Entertainment and music. Generative AI has already created songs in the style of existing artists.

- Marketing, advertising, and content creation. This type of tool can create fictional characters, write stories, and help in creating personalized marketing campaigns and advertisements, targeting specific demographics.

- Gaming. Generative AI can create new levels, characters, and scenarios in games, making them more engaging and interactive.

- Medicine and research. This technology can even assist in drug discovery by generating novel chemical structures.

- Fashion and retail. Generative AI can create new clothing designs and predict fashion trends.

- Technology. These tools can help write documentation and code.

- Customer service. Generative AI can handle customer and internal queries when trained on knowledge bases.

However, concerns surrounding generative AI include ethical considerations, such as the potential for generating misleading or harmful content, deepfakes, or the unintentional biases present in the training data being reflected in the generated output.

What is Sustainable AI?

There is no doubt that AI has enormous potential to help humankind. But without sustainable AI practices, we can expect the world’s data centers to consume more energy annually than the entire human workforce combined.

At Weka, we’ve seen that AI isn’t really the problem—it’s inefficient traditional data infrastructure and management approaches that are mostly to blame.

Sustainable AI is the development, deployment, and use of AI in a manner that promotes environmental sustainability, social responsibility, and long-term ethical considerations. It encompasses practices and principles aimed at minimizing the negative impacts of AI on the environment, society, and the economy while maximizing its positive contributions.

Key aspects of AI sustainability include:

Energy efficiency. AI models and algorithms can be computationally intensive and require significant energy consumption. Sustainable AI focuses on developing energy-efficient algorithms and optimizing hardware infrastructure to reduce the environmental impact of AI systems. This includes exploring techniques like model compression, quantization, and efficient hardware architectures.
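As a rough illustration of the quantization technique mentioned above, the sketch below stores floating-point weights as 8-bit integers plus a single scale factor. It assumes a simple symmetric scheme. The weight values and function names are hypothetical, and production frameworks use more elaborate variants.

```python
def quantize_int8(weights):
    """Symmetric linear quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.0, 0.64]  # hypothetical model weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

An 8-bit integer takes one byte where a 32-bit float takes four, so the stored weights shrink to roughly a quarter of their size. The price is a rounding error of at most half the scale factor per weight.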

Rethinking the data stack. In the AI and cloud era, the enterprise data stack must be software-defined and architected for the hybrid cloud. To harness HPC, AI, ML, and other next-generation workloads, it must run seamlessly anywhere data lives, is generated, or needs to go: on-premises, in the cloud, at the edge, or in hybrid and multi-cloud environments.

Responsible data usage, sustainable AI storage. Sustainable AI emphasizes responsible and ethical handling of data. It involves ensuring proper data privacy and protection, obtaining informed consent, and avoiding the use of biased or discriminatory data. Sustainable AI frameworks promote transparency, fairness, and accountability in data collection, storage, and utilization.

Ethical, transparent AI. Sustainable AI integrates ethical considerations into the design and development of AI systems to be more just, transparent, and accountable. Implementing ethical guidelines and regulatory frameworks ensures that AI technologies align with societal values and do not detrimentally impact individuals or communities.

Social impact. Sustainable AI leverages AI for positive social impact. This includes using AI to address societal challenges, improve access to services, promote inclusivity, and bridge digital divides. Sustainable AI frameworks prioritize applications that benefit marginalized communities, healthcare, education, environmental conservation, and other areas of social significance.

Continuous learning and adaptability. Sustainable AI promotes the development of AI systems that continuously learn and adapt to changing circumstances. This involves building AI models that evolve and update based on new data and feedback, ensuring that they remain effective, accurate, and aligned with evolving needs and values.

Collaboration and partnerships. Sustainable AI initiatives foster collaboration among various stakeholders, including researchers, policymakers, industry, civil society organizations, and the public. Collective efforts develop more sustainable guidelines, standards, and policies that guide more responsible development and deployment of AI technologies.

Learn more about Weka’s sustainability initiative.

Applications of AI

Much of what was once confined to the realm of science fiction is now within reach of real-world AI applications. There are prominent examples of AI technology in most major fields today.

AI in Healthcare

AI applications in healthcare have shown significant potential in improving diagnosis, treatment, patient care, and healthcare management. Here are some examples:

Medical imaging analysis. AI algorithms can analyze medical images, such as CT scans, X-rays, and MRIs, to assist in the detection and diagnosis of various conditions. Deep learning models have been developed to accurately detect abnormalities and assist radiologists in identifying diseases like cancer, cardiovascular conditions, and neurological disorders.

Clinical decision support systems. AI can support healthcare professionals by analyzing patient data, medical records, and relevant literature to offer personalized treatment recommendations and to alert clinicians to potential drug interactions or adverse events.

Disease prediction and risk assessment. AI algorithms can analyze patient data, including electronic health records (EHRs) and genetic information, to identify patterns and predict the risk of certain diseases. This can aid in early detection, preventive interventions, and personalized treatment plans.

Virtual health assistants. AI-powered virtual assistants and chatbots can interact with patients, answer common health-related questions, provide basic medical advice, and offer support for mental health issues. They can triage patient symptoms, provide self-care recommendations, and direct individuals to appropriate healthcare services.

Remote patient monitoring. AI-enabled wearable devices and sensors can continuously monitor vital signs, activity levels, and other health parameters of patients with chronic conditions, enabling early detection of deteriorating health and timely intervention.

Precision medicine. AI can help tailor treatment plans to patients based on their genetic makeup, medical history, and other relevant factors. It helps identify patient subgroups, predict treatment response, and optimize personalized therapies.

Administrative and operational efficiency. AI can streamline administrative tasks and improve operational efficiency, automating scheduling, billing, and documentation processes, so healthcare professionals can focus on patient care.

These are just a few examples of AI use cases in healthcare.

AI in Financial Services and Banking

There are numerous applications for AI in banking and financial services:

Fraud detection and prevention. AI algorithms can analyze large volumes of transactional data in real time to detect fraudulent activities. ML models can learn patterns of fraudulent behavior and flag suspicious transactions, helping banks prevent financial losses, stop account takeovers, and enhance security measures.
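To make the idea of flagging suspicious transactions concrete, here is a deliberately simple sketch. It is not a trained ML model. It uses a plain statistical rule that flags any amount lying far from a customer's historical average. The transaction amounts, the function name, and the threshold of three standard deviations are assumptions for illustration only.

```python
from statistics import mean, stdev


def flag_suspicious(history, new_amounts, threshold=3.0):
    """Flag amounts more than `threshold` standard deviations away from
    the mean of the customer's past transactions."""
    mu = mean(history)
    sigma = stdev(history)
    flagged = []
    for amount in new_amounts:
        z = (amount - mu) / sigma if sigma > 0 else 0.0
        if abs(z) > threshold:
            flagged.append(amount)
    return flagged


# Hypothetical past card transactions for one customer, in dollars.
history = [42.0, 18.5, 63.2, 25.0, 51.8, 37.4, 29.9, 44.1]
incoming = [39.0, 950.0, 58.0]
print(flag_suspicious(history, incoming))
```

Production systems typically combine many more signals, such as merchant, location, and timing, and learn the decision boundary from labeled examples rather than using a fixed threshold.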

Customer service and chatbots. AI- and NLP-powered chatbots and virtual assistants can provide personalized customer service and support. They can handle routine customer inquiries, assist with account inquiries, provide product recommendations, and guide customers through various banking processes.

Credit scoring and risk assessment. AI models and ML algorithms can analyze vast amounts of customer data, credit history, and financial information to assess creditworthiness and determine risk profiles. They can predict default probabilities, evaluate loan applications, and automate the credit underwriting process, allowing banks to make faster and more consistent credit decisions, provided the models are monitored for bias in their training data.

Anti-money laundering (AML). AI technologies can assist in detecting money laundering activities by analyzing transactional data and identifying suspicious patterns. AI models can identify high-risk transactions, monitor customer behavior, and generate alerts for further investigation, helping banks comply with regulatory requirements and combat financial crimes.

Personalized banking and recommendations. AI algorithms can analyze customer data, transaction history, and browsing patterns to provide personalized banking experiences. AI-powered systems can offer tailored product recommendations, investment advice, and financial planning suggestions based on individual customer preferences and financial goals.

Trading and investment. AI-powered algorithms can analyze vast amounts of market data, news articles, and social media sentiments to generate investment insights and inform trading decisions. AI-driven trading systems can identify patterns, execute trades, and optimize investment portfolios based on predefined strategies.

Process automation. AI can automate repetitive and manual processes in banking, such as data entry, document verification, and customer onboarding. Robotic Process Automation (RPA) can streamline back-office operations, reduce errors, and enhance operational efficiency.

AI in Government

AI can enhance government decision-making, improve public services, and streamline operations in multiple ways:

Data analysis and insights. Governments generate vast amounts of data, and AI can help them extract valuable insights from it to identify patterns, trends, and correlations, enabling policymakers to make data-driven decisions and develop evidence-based policies.

Smart cities and urban planning. AI can be used to optimize urban infrastructure, transportation systems, and energy usage in smart cities. AI algorithms can analyze real-time data from sensors and devices to optimize traffic flow, manage energy consumption, and improve the overall quality of life in urban areas.

Public safety and security. AI technologies such as facial recognition systems can enhance public safety and security efforts like identifying suspects or missing persons. Predictive analytics can help law enforcement agencies allocate resources more effectively to prevent and respond to crime. AI-powered systems can also assist in analyzing social media and online platforms for early detection of security threats.

Citizen services and chatbots. Governments can provide round-the-clock assistance to citizens with AI-powered chatbots and virtual assistants that address citizen inquiries, provide information about government services, and guide users through various processes, such as applying for permits or accessing public resources.

Fraud detection and tax compliance. AI can assist government agencies in detecting fraud and non-compliance and in improving tax collection.

Natural language processing and document analysis. Governments handle vast amounts of documents and records. AI-powered natural language processing (NLP) can facilitate document analysis, automate information extraction, and enable efficient information search and retrieval from unstructured data sources.

Emergency response and disaster management. Predictive models and AI algorithms can analyze data from various sources, such as weather patterns, historical incidents, and social media, to predict and assess potential risks in support of emergency response and disaster management efforts. AI can also assist in resource allocation, emergency planning, and real-time situational awareness during crises.

Administrative efficiency. AI technologies, including robotic process automation (RPA), can automate administrative tasks, streamline workflows, and reduce manual errors in government processes. This allows government agencies to allocate resources more efficiently and focus on higher-value tasks.

AI in Life Sciences

AI plays a significant role in the life sciences:

Drug discovery and development. AI accelerates drug discovery; ML models can analyze vast amounts of biological and chemical data to identify potential drug candidates, predict their efficacy, optimize molecular structures, and simulate drug-target interactions. AI algorithms enable the screening of large chemical libraries and help researchers prioritize and design experiments more efficiently.

Genomics and bioinformatics. In genomics research and bioinformatics, ML algorithms can analyze DNA and RNA sequences, identify genetic variations, predict protein structures and functions, and assist in understanding the genetic basis of diseases. AI techniques are also used in comparative genomics, protein folding, and predicting drug-target interactions.

Biomarker discovery. AI algorithms can analyze large-scale biological data, such as omics data (genomics, proteomics, metabolomics), to identify biomarkers that can be used for disease diagnosis, prognosis, and monitoring treatment response. AI helps uncover complex relationships between biomarkers and disease states that may not be apparent through traditional analysis methods.

Drug repurposing. AI techniques can identify new therapeutic uses for existing drugs by mining large datasets of molecular information, clinical records, and published literature. By analyzing drug properties, molecular pathways, and disease characteristics, AI can suggest potential drug candidates for repurposing and accelerate the development of new treatments.

Scientific research and literature analysis. AI assists researchers in scientific literature analysis by extracting relevant information, identifying relationships between articles, and summarizing vast amounts of scientific literature. This keeps researchers up-to-date with the latest findings, and helps them discover new insights and generate hypotheses.

AI in Media and Entertainment

AI is transforming the media and entertainment industries, enhancing content creation, personalization, recommendation systems, and audience engagement:

Content generation. AI technologies can generate automated reports, news articles, and summaries. Natural language processing (NLP) models can analyze data, extract relevant information, and generate written content. AI algorithms can also generate music, artwork, and video content.

Video and image analysis. AI algorithms can analyze videos and images to recognize objects, scenes, and faces. This enables automated video tagging, content moderation, and indexing. AI-powered video analysis can assist in video editing, content segmentation, and personalized video recommendations.

Recommendation systems. AI-powered recommendation engines leverage ML algorithms to analyze user behavior, preferences, and historical data to provide personalized content recommendations. This helps users discover relevant movies, music, TV shows, articles, and other media content.
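One common way to build such an engine is user-based collaborative filtering: find users whose ratings resemble yours, then suggest what they liked that you have not yet seen. The sketch below is a minimal version of that approach, assuming cosine similarity as the resemblance measure. The users, titles, and ratings are hypothetical.

```python
from math import sqrt


def cosine(u, v):
    """Cosine similarity between two users' rating dictionaries."""
    shared = set(u) & set(v)
    dot = sum(u[k] * v[k] for k in shared)
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def recommend(target, ratings, top_n=2):
    """Score items the target has not rated by similarity-weighted ratings
    from other users, and return the highest-scoring titles."""
    scores = {}
    for user, their_ratings in ratings.items():
        if user == target:
            continue
        sim = cosine(ratings[target], their_ratings)
        for item, rating in their_ratings.items():
            if item not in ratings[target]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


# Hypothetical users, titles, and 1-5 ratings.
ratings = {
    "ana": {"Space Saga": 5, "Robot Noir": 4, "Baking Duel": 1},
    "ben": {"Space Saga": 5, "Robot Noir": 5, "Star Drift": 5},
    "cara": {"Baking Duel": 5, "Garden Hour": 4, "Space Saga": 1},
}
print(recommend("ana", ratings))
```

Here "ana" rates titles much as "ben" does, so the title only "ben" has rated ranks above the one only "cara" has rated. Real engines work with millions of users and usually add signals such as viewing time and recency.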

Content curation and personalization. AI personalizes content curation by analyzing user preferences, demographics, and engagement patterns. By understanding individual interests, AI algorithms can deliver personalized content feeds, newsletters, and targeted advertisements, enhancing the user experience and engagement.

Enhanced user interfaces. AI technologies, such as NLP and computer vision, can enhance user interfaces and interactions in media and entertainment. Voice assistants, chatbots, and virtual reality applications are empowered by AI to provide immersive and intuitive user experiences.

Audience insights and analytics. AI-powered analytics platforms can process large volumes of data from social media, online platforms, and user interactions to generate audience insights. Media companies can use these insights to understand audience preferences, behavior, sentiment, and engagement patterns, enabling them to make informed decisions about content creation, marketing strategies, and audience targeting.

Content moderation. AI can aid in content moderation by automatically identifying and flagging inappropriate or objectionable content such as hate speech, explicit images, or copyright violations. AI-powered systems can help maintain content quality and ensure compliance with community guidelines and regulatory standards.

Live streaming and real-time analytics. AI technologies enable real-time analytics and monitoring of live events, streaming platforms, and social media discussions, providing insights into audience sentiment, engagement levels, and real-time feedback. This helps media companies and broadcasters adjust their content, coverage, and programming based on audience reactions and interests.

The Future of AI. What’s Next?

Given how rapidly the field has changed recently, what does the future of AI look like? In general, AI is becoming more and more like the human brain, and less constrained by traditional, rule-based designs.

The most obvious changes we are likely to see in the coming years will show up as a few broad trends:

- Pace of culture and life ticks up. As massive organizations make data-driven decisions ever more rapidly, their organizational cultures will become more nimble. Larger shifts like these toward a faster pace of life can push the rest of us to live a little more quickly, too.

- Less privacy, enhanced security. We’ve already seen a trend toward reduced privacy, especially online. The cost of enhanced convenience and speed is, at times, less privacy, at least from your friendly UX bot. But enhanced security, especially for data, will be paramount to sustaining user trust in these platforms.

- The law of AI will develop. 2023 has seen federal hearings on what the law surrounding AI should be, and the law in this area is poised to develop rapidly alongside internet law generally.

- Human/AI interface. This is coming already, as researchers experiment with restoring the ability to move or speak for people with severe disabilities.

- Continued demands for sustainability. The need to conserve energy and find sustainable solutions for data management and storage will only grow more urgent as technology evolves.

What is Storage for AI?

One of the key challenges to achieving sustainable AI is storage. IT infrastructure is in a state of continuous change, but rethinking storage for AI/ML workloads can help ease this process.

Data enters the ML pipeline at each stage; which kind of AI storage system is best suited to handle it?

- Ingestion. During the data- and capacity-intensive ingest stage, the data is collected and placed into data lakes, where it needs to be classified and sometimes needs to be cleaned.

- Preparation. AI and ML demand extremely high-throughput operations, so the data preparation phase requires write speed to support many sequential writes.

- Training. The resource-intensive training stage of the AI/ML pipeline is latency-sensitive rather than data-intensive, and it strongly influences the project's ultimate time to result.

- Inference. IO may be smaller and workloads mixed at this stage, but extremely low latency is what matters most.

- Staging and archive. AI/ML demands a large amount of data to test and run its models—a multi-petabyte scale of active archive might be required at this stage.

The challenge is choosing the type of AI storage system best suited to the data at hand.
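The pipeline stages and their dominant storage needs can be captured in a small lookup structure, which is one way to reason about what each stage asks of a storage system. This is only a sketch: the class name, field names, and three priority labels are assumptions made for illustration, and they summarize the stage descriptions rather than any product configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageProfile:
    stage: str
    priority: str    # dominant storage requirement for the stage
    io_pattern: str  # short description of the expected workload


PIPELINE = [
    StageProfile("ingestion", "capacity", "large volumes landing in data lakes"),
    StageProfile("preparation", "throughput", "many sequential writes"),
    StageProfile("training", "latency", "latency-sensitive access"),
    StageProfile("inference", "latency", "smaller, mixed IO"),
    StageProfile("staging and archive", "capacity", "multi-petabyte active archive"),
]


def stages_needing(priority):
    """Return the names of pipeline stages whose dominant need is `priority`."""
    return [p.stage for p in PIPELINE if p.priority == priority]


for need in ("capacity", "throughput", "latency"):
    print(f"{need:>10}: {', '.join(stages_needing(need))}")
```

Grouping the stages this way makes the tension visible: a single system has to serve capacity-heavy, throughput-heavy, and latency-sensitive stages at once.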

There are six aspects of AI/ML infrastructure to consider:

Portability

AI/ML practitioners generally need the ability to deliver storage anywhere. In a modern environment, data and storage can be anywhere—yet many storage solutions are still designed with a single data center in mind. Data might still be deployed within a data center, but some data storage also will be deployed at the edge, in the cloud, or elsewhere.

Interoperability

AI/ML workloads must support modern libraries so they are not stuck in a silo. Storage for an AI/ML project should be provisionable by developers or business owners and must be compatible with provisioning tools. It must support the relevant APIs and handle the workload, including in self-service mode. Finally, the architecture must support container platforms, mostly Kubernetes-oriented applications.

Scalability

Scale is important, especially for unstructured data. However, architecture also needs to change, because while scaling up is very important, so is scaling down.

Many projects start small and grow as needed, so there is no need to invest in a multi-petabyte solution at the outset. Some types of storage also don’t scale in the right ways for AI. Network Attached Storage (NAS), for example, is a vertical platform that cannot scale out to the sizes, or in the distributed, horizontal ways, required for AI/ML workloads.

Performance

AI/ML workloads demand the highest possible performance. Workloads with high-throughput, large IO, and random reads and writes must coexist harmoniously and with low latency. This requires new protocols, new flash media, new software, and GPUs for the training phase of AI/ML. The biggest weak spot in this chain is feeding enough data into the system; keeping storage full of ready-to-use data is the only way to achieve this.

Software-defined models

In terms of portability, an AI model needs to be deployed anywhere using software-defined architecture that is appliance independent yet supports modern hardware innovations. For example, software-defined-storage vendors were the first supporters of NVMe technology, and just as their software independence made them good partners then, they remain ideal for people who need to deploy AI/ML workloads for data management anywhere across the edge, core, and cloud.

Cost optimization

To right-size storage investment for AI/ML, it’s critical to plan beyond training and inference, the most demanding phases of the project, and across the entire data lifecycle. Deploying all data on a single platform becomes prohibitively large and expensive, but planning with all phases of the work in mind, including active archiving and deep archiving, allows practitioners to reduce TCO for the entire AI/ML process.
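A quick back-of-the-envelope calculation shows why planning across the whole lifecycle matters. All numbers below are hypothetical: the per-terabyte prices, the 1,000 TB total, and the split across tiers are assumptions picked only to make the arithmetic easy to follow.

```python
def monthly_cost(tb_by_tier, price_per_tb):
    """Total monthly storage cost given capacity and price for each tier."""
    return sum(tb_by_tier[tier] * price_per_tb[tier] for tier in tb_by_tier)


# Hypothetical prices in dollars per TB per month; real pricing will differ.
PRICE = {"flash": 100.0, "active_archive": 20.0, "deep_archive": 4.0}

# 1,000 TB in total. Option A keeps everything on the performance tier.
single_tier = {"flash": 1000, "active_archive": 0, "deep_archive": 0}
# Option B keeps only the hot training and inference data on flash.
tiered = {"flash": 100, "active_archive": 300, "deep_archive": 600}

cost_a = monthly_cost(single_tier, PRICE)
cost_b = monthly_cost(tiered, PRICE)
print(cost_a, cost_b, f"{1 - cost_b / cost_a:.0%} lower")
```

Under these assumed prices, the tiered layout costs a fraction of the single-platform layout for the same total capacity. The actual ratio depends entirely on real pricing and on how much data genuinely needs to stay on the fastest tier.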

Solve your AI/ML Data Challenges with WEKA

AI has the potential to revolutionize how we interact with computers, but the key to unlocking that potential lies in making efficient use of high-quality, diverse training data. Training models effectively requires large amounts of data, and acquiring, storing, and processing that data can be a challenge. The cost of storage and the time spent managing it are significant factors as the size of the data set grows. Powering complex AI pipelines with WEKA’s data platform for AI streamlines file systems, combining multiple sources into a single high-performance computing system.

With WEKA, you get the following benefits:

- The performance you need to reduce epoch times for I/O-intensive workloads

- The industry’s best GPUDirect performance, delivering up to 162 GB/sec for a single DGX-A100

- Seamless, non-disruptive scaling from terabytes to tens of exabytes, as well as performant scaling from a small number of large data files to billions of small files

- Deploy on the hardware of your choice on premises, migrate your demanding workloads to the cloud with WEKA, or enable seamless cloud bursting as needed

Contact our team of experts to learn more about WEKA and how we can support your AI/ML projects.