Accelerating Containers with a Kubernetes CSI Parallel File System

As enterprise workloads move to the cloud, application developers need a lightweight way to deploy new or updated applications quickly and seamlessly, and containers have become the go-to technology for doing so. Containerization is a packaging mechanism that abstracts an application from the underlying server hardware and enables fast deployment of new features. Containers isolate applications from one another while still giving each application access to the system resources it needs. Using containers is similar in some ways to creating a fully virtualized environment, but it is much more lightweight. This approach allows cloud-native applications to be delivered to customers quickly.

Google is a pioneer in the development of containers. As a leader in internet and cloud technologies, Google needed a way to manage the deployment of many thousands of containers at once. The orchestration system that Google open-sourced is now known as the Kubernetes project. Today, a significant percentage of all software deployed to public clouds uses containers, and much of it uses Kubernetes as the orchestration software.

Learn Various Application Deployment Models

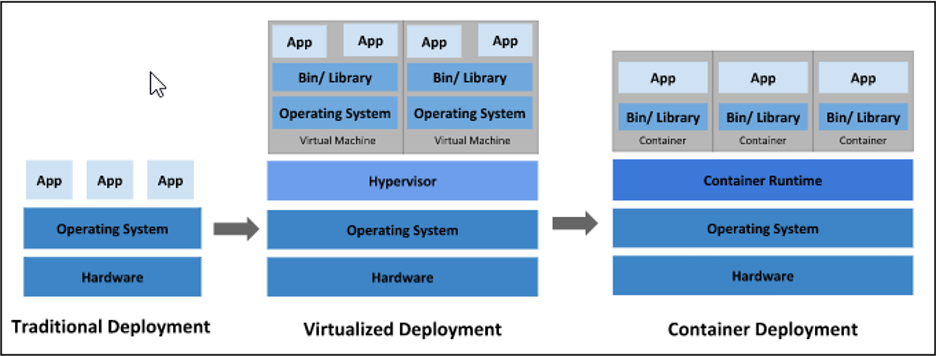

As Figure 1 shows, there are several options for deploying applications. In the past, an on-premises datacenter or a bare-metal cloud environment would typically dedicate a new server to a specific set of applications. The operating system (OS) would be set up and the application(s) installed, and once certified, the server would rarely be touched. This often led to an infrastructure imbalance: demanding applications could be starved of resources, while servers whose applications did not fully use the underlying hardware left resources idle.

Virtualized deployments sought to overcome resource imbalance and under-utilization. By allowing full guest operating systems to be installed on top of a host OS, they let entirely different operating environments share the same hardware. However, each guest required a complete OS installation, which made virtual machines “heavy”. Although the underlying resources could now be used more fully, distributing and managing full operating systems was cumbersome and resource-intensive.

Containers, as shown in Figure 1, are “packaged” solutions that contain only what an application needs to run, such as runtime libraries and any required supporting software. No guest OS needs to be installed; instead, the container runtime handles communication with the base OS and manages the containers’ other resource needs. Because the applications share a single operating system, containers are referred to as “lightweight”, and they typically run with far less overhead than virtual machines, which translates into higher performance.

By design, a container is stateless: when the container is removed, the data associated with it disappears as well. This is a significant problem when critical information, whether held in memory or written to a storage device, must remain available for future use. On the other hand, because containers are lightweight, creating and starting a new one is fast and straightforward, so new containers for a new service or for spreading a workload more widely can be brought up quickly, with immediate results.

Many applications have relied on local storage and a local file system installed on the application server and accessed through a container runtime. However, as applications become more distributed and data sets grow beyond the capacity of local storage, the demands on the I/O system increase, and containers need access to a persistent file storage system that can keep up with application requirements. Persistent storage, where the data remains available even when a container is moved or removed, is critical in a distributed application environment: when a container is removed, its data must not be destroyed and must remain available to other applications.
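In Kubernetes terms, the difference between container-local data and persistent storage shows up in how a Pod declares its volumes. The sketch below is a minimal illustration, not a recommended configuration: it builds a Pod manifest as a Python dictionary with an `emptyDir` volume, whose contents are deleted when the Pod is removed, and a volume backed by a PersistentVolumeClaim, whose data outlives the Pod. The Pod name, image, and claim name (`demo-app`, `busybox:1.36`, `demo-data`) are hypothetical placeholders, and the claim is assumed to exist already.

```python
import json


def build_pod(name: str, image: str, claim_name: str) -> dict:
    """Return a Pod manifest with one ephemeral and one persistent volume.

    All names passed in are hypothetical placeholders.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": "app",
                    "image": image,
                    "volumeMounts": [
                        # Scratch space: an emptyDir is deleted together with the Pod.
                        {"name": "scratch", "mountPath": "/scratch"},
                        # Durable data: backed by a PersistentVolumeClaim
                        # that outlives the Pod.
                        {"name": "data", "mountPath": "/data"},
                    ],
                }
            ],
            "volumes": [
                {"name": "scratch", "emptyDir": {}},
                {"name": "data", "persistentVolumeClaim": {"claimName": claim_name}},
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_pod("demo-app", "busybox:1.36", "demo-data"), indent=2))
```

kubectl accepts JSON manifests as well as YAML, so the printed output could be saved to a file and applied with `kubectl apply -f`.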

There are several popular container formats, with Docker leading the way according to some estimates. However, managing containers (creating, replicating, and deleting them) requires a management interface, and the most popular one today is Kubernetes, sometimes abbreviated as K8s. Google open-sourced Kubernetes, and it is now widely available. Kubernetes is a framework for managing the containers that a single application requires or that a set of applications needs in order to work together.

What is a Container Storage Interface?

The Container Storage Interface (CSI) is a standard that enables storage vendors to write a single plugin for adding or removing storage volumes across different container orchestration systems. CSI support reached general availability (GA) in Kubernetes v1.13. CSI allows developers and storage vendors to expose their file systems, whatever these may be, to the applications running in containers. As a result, storage becomes much more extensible, and innovative storage products can be made more widely available. Using CSI, for example, an application can quickly start using a high-performance parallel file system (PFS), improving the performance of large, distributed applications that require high-speed input and output.
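To make the idea of a plugin that adds and removes volumes more concrete, here is a toy, in-memory sketch of a volume lifecycle. The method names loosely mirror the CSI remote procedure calls `CreateVolume`, `NodePublishVolume`, `NodeUnpublishVolume`, and `DeleteVolume`, but this is an illustration under simplifying assumptions, not the CSI gRPC API and not any vendor’s driver: nothing is actually provisioned or mounted. It does reflect behaviors the CSI specification asks of plugins: a repeated create request with the same name returns the existing volume, deleting a volume that no longer exists succeeds, and a volume still in use is not deleted. The class `ToyCSIPlugin`, the target paths, and the sizes are hypothetical.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Volume:
    volume_id: str
    name: str
    capacity_bytes: int
    # Target paths (one per consuming container) where the volume is "mounted".
    published_to: set[str] = field(default_factory=set)


class ToyCSIPlugin:
    """Hypothetical in-memory stand-in for a CSI plugin.

    Method names echo CSI RPCs, but this is not the gRPC API and no real
    storage is provisioned or mounted.
    """

    def __init__(self) -> None:
        self.volumes: dict[str, Volume] = {}

    def create_volume(self, name: str, capacity_bytes: int) -> Volume:
        # Idempotent on name: a repeated request returns the existing volume.
        for vol in self.volumes.values():
            if vol.name == name:
                if vol.capacity_bytes != capacity_bytes:
                    raise ValueError(f"volume {name!r} exists with a different size")
                return vol
        vol = Volume(str(uuid.uuid4()), name, capacity_bytes)
        self.volumes[vol.volume_id] = vol
        return vol

    def node_publish_volume(self, volume_id: str, target_path: str) -> None:
        # Make the volume available to a container at target_path.
        self.volumes[volume_id].published_to.add(target_path)

    def node_unpublish_volume(self, volume_id: str, target_path: str) -> None:
        self.volumes[volume_id].published_to.discard(target_path)

    def delete_volume(self, volume_id: str) -> None:
        vol = self.volumes.get(volume_id)
        if vol is None:
            return  # Already gone: treated as success.
        if vol.published_to:
            # Refuse to destroy data that containers are still using.
            raise RuntimeError(f"volume {volume_id} is still published")
        del self.volumes[volume_id]


if __name__ == "__main__":
    plugin = ToyCSIPlugin()
    vol = plugin.create_volume("shared-data", 10 * 2**30)
    # Two containers share the same volume.
    plugin.node_publish_volume(vol.volume_id, "/pods/a/data")
    plugin.node_publish_volume(vol.volume_id, "/pods/b/data")
    print(sorted(vol.published_to))
    # Copy the set before iterating, because unpublishing mutates it.
    for path in list(vol.published_to):
        plugin.node_unpublish_volume(vol.volume_id, path)
    plugin.delete_volume(vol.volume_id)
    print(len(plugin.volumes))
```

A real driver splits this work between a controller service and a node service and reports failures as gRPC status codes rather than Python exceptions.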

Benefits of using Container-as-a-Service

A new and exciting offering that can be made available to cloud computing customers is Container-as-a-Service (CaaS). A CaaS offering provides all of the components and management services needed to start, organize, scale, execute, replace, and stop containers. An essential addition to a CaaS offering is packaged I/O volumes delivered through CSI.
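In Kubernetes, a CaaS platform typically exposes CSI-backed volumes through a StorageClass, and applications request capacity with a PersistentVolumeClaim; the CSI driver then provisions a matching volume (dynamic provisioning). The following sketch generates both objects as JSON. The driver name `csi.example.com`, the class name `fast-shared`, the claim name, and the 100Gi size are hypothetical; a real deployment would use the provisioner name and `parameters` documented by the storage vendor, and the `ReadWriteMany` access mode only works if the driver supports shared access.

```python
import json

# Hypothetical driver name: the real value comes from the vendor's CSI documentation.
CSI_DRIVER = "csi.example.com"


def storage_class(name: str, provisioner: str) -> dict:
    """A StorageClass that hands volume provisioning to a CSI driver."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        # Driver-specific options belong in "parameters"; none are assumed here.
        "parameters": {},
        # Keep the backing volume when its claim is deleted.
        "reclaimPolicy": "Retain",
        "volumeBindingMode": "Immediate",
    }


def claim(name: str, class_name: str, size: str, access_mode: str = "ReadWriteMany") -> dict:
    """A PersistentVolumeClaim that requests storage from the given class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


def manifest_list(*items: dict) -> dict:
    """Bundle objects into a v1 List so kubectl can apply them from one file."""
    return {"apiVersion": "v1", "kind": "List", "items": list(items)}


if __name__ == "__main__":
    bundle = manifest_list(
        storage_class("fast-shared", CSI_DRIVER),
        claim("shared-data", "fast-shared", "100Gi"),
    )
    print(json.dumps(bundle, indent=2))
```

Keeping the StorageClass separate from the claim is what lets a provider package storage once and let each tenant request only the capacity it consumes.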

Cloud computing offerings are generally classified as Infrastructure-as-a-Service (IaaS), Platform-as-a-Service (PaaS), or Software-as-a-Service (SaaS). CaaS is most commonly positioned as part of an IaaS offering.

Some of the benefits of using a CaaS include:

- Reduction in operating expense – End users only pay for what is currently consumed, which includes the compute, the storage capacity, and the I/O portion of the application.

- Scaling up or down as needed – As workloads change, the customer can scale resources either up or down with CaaS.

- Developers can respond quickly – By using a CaaS, developers can quickly deploy new or updated software, which includes new application features or new storage options.

Innovative applications need to incorporate the latest developments that users demand. By adding new storage capabilities through a Kubernetes CSI plugin, applications can share data across a range of cloud providers with predetermined security features enabled. Many fields stand to benefit quickly from Kubernetes CSI plugins, including High-Performance Computing (HPC), Artificial Intelligence (AI), Machine Learning (ML), and databases. Persistent storage that is fast and easily accessible by multiple containers, whether they run sequentially or simultaneously, enables new applications that must ingest and act on massive amounts of data. By aligning with Kubernetes, the most popular container orchestrator, vendors that offer CSI plugins can expand their customer base. Whether implemented on-premises, in a public cloud, or as part of a hybrid cloud solution, this development simplifies building and running cloud-native applications. As container-based software becomes the norm for easy deployment and workload scaling, a CSI plugin is a practical way to get the most out of a cloud strategy while reducing costs.

Deploying Containerized Applications Using the Kubernetes CSI Plug-in

In this webinar, you will learn about the container ecosystem, Kubernetes orchestration, and the architecture requirements of high-performance stateful applications at scale.

WekaIO has introduced a Kubernetes CSI plugin that allows its customers to deliver CaaS functionality built on the Weka file system. The WekaFS Kubernetes CSI plugin addresses many of the challenges that innovative software developers and their customers face, such as:

- Support for stateful and stateless applications

- Shareability of data – a single data set can be shared across many containers

- Scalability of data – ability to scale to exabytes of storage in a single namespace

- Performance of storing or retrieving data – low-latency NVMe performance across a shared file system

- Portability across various cloud systems, including multi-cloud, hybrid-cloud, and on-premises deployments – container mobility with Weka’s cloud bursting capability
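Shareability in practice usually means several Pods mounting the same PersistentVolumeClaim. The sketch below, a minimal example under stated assumptions, builds a Deployment whose replicas all reference one claim, so every container sees the same data set. It assumes a claim with the hypothetical name `shared-data` already exists with the `ReadWriteMany` access mode, which in turn requires a storage backend and CSI driver that support shared read-write access; the Deployment name, image, and replica count are also placeholders.

```python
import json


def shared_deployment(name: str, image: str, replicas: int, claim_name: str) -> dict:
    """Deployment whose replicas all mount the same (hypothetical) RWX claim."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the Pod template's labels.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": "worker",
                            "image": image,
                            "volumeMounts": [{"name": "dataset", "mountPath": "/dataset"}],
                        }
                    ],
                    # Every replica references the same claim, so all of them
                    # see one data set (requires a ReadWriteMany-capable volume).
                    "volumes": [
                        {"name": "dataset", "persistentVolumeClaim": {"claimName": claim_name}}
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = shared_deployment("trainer", "registry.example.com/trainer:1.0", 4, "shared-data")
    print(json.dumps(manifest, indent=2))
```

Scaling the data consumers up or down then only means changing `replicas`; the data set itself stays in one place.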

The benefits that the WekaFS Kubernetes CSI plugin brings to all types of organizations include:

- Flexible deployment options

- Faster decision making based on more rapid access to data

- Confidence that their valuable data is always available by using persistent storage

Many applications are stateful, meaning that their data must remain available even if the application stops executing. Many applications are also performance-sensitive and use local SSDs to achieve low-latency access to critical data. This creates a problem when containers are the deployment method: if a container goes down, any data stored in that container is lost as well. Leading-edge applications therefore need a persistent storage mechanism that works within the container system and delivers data from SSDs across a cluster of servers (even faster than local access). A parallel file system with a global namespace, working within a container-based environment, will lead the way for stateful applications that require fast access to massive amounts of data.
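For stateful applications such as the databases listed below, Kubernetes offers the StatefulSet, whose `volumeClaimTemplates` give each replica its own PersistentVolumeClaim that survives Pod restarts and rescheduling. The sketch below generates such a manifest and computes the claim names Kubernetes derives from it (`<template>-<statefulset>-<ordinal>`). The StatefulSet name, image, StorageClass name `fast-storage`, size, and mount path are hypothetical, and a headless Service with the same name as the StatefulSet is assumed to exist.

```python
import json

CLAIM_TEMPLATE = "data"  # name shared by the volume mount and the claim template


def stateful_set(name: str, image: str, replicas: int,
                 class_name: str, size: str, mount_path: str) -> dict:
    """StatefulSet giving each replica its own persistent claim (placeholder values)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            "serviceName": name,  # assumes a headless Service with this name exists
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "volumeMounts": [{"name": CLAIM_TEMPLATE, "mountPath": mount_path}],
                        }
                    ]
                },
            },
            # One claim per replica is created from this template; it survives
            # Pod restarts and rescheduling, so each replica keeps its data.
            "volumeClaimTemplates": [
                {
                    "metadata": {"name": CLAIM_TEMPLATE},
                    "spec": {
                        "accessModes": ["ReadWriteOnce"],
                        "storageClassName": class_name,
                        "resources": {"requests": {"storage": size}},
                    },
                }
            ],
        },
    }


def expected_claim_names(template: str, sts_name: str, replicas: int) -> list[str]:
    """Claims created by a StatefulSet are named <template>-<statefulset>-<ordinal>."""
    return [f"{template}-{sts_name}-{i}" for i in range(replicas)]


if __name__ == "__main__":
    sts = stateful_set("db", "registry.example.com/db:1.0", 3, "fast-storage", "50Gi", "/var/lib/db")
    print(json.dumps(sts, indent=2))
    print(expected_claim_names(CLAIM_TEMPLATE, "db", 3))
```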

Some examples of stateful applications include:

- Single instance databases: MySQL, PostgreSQL, MariaDB

- NoSQL databases: Cassandra, MongoDB

- Data processing and AI/ML: Hadoop, Spark, TensorFlow, PyTorch, Kubeflow, NGC, etc.

- In-memory databases: Redis, MemSQL

- Messaging: Kafka

- Business applications

In summary, the WekaIO CSI plugin for Kubernetes is a leap forward in providing the features and performance that customers need, and is simple and easy to deploy. By integrating with the Kubernetes orchestration system, customers will see many benefits and be able to make faster and better decisions across a range of applications.

More information about the WekaIO Kubernetes CSI plugin is available at https://kubernetes-csi.github.io/docs/drivers.html