NVIDIA DGX H100: How it Works and More

What is NVIDIA DGX H100?

NVIDIA DGX H100 is a high-performance computing (HPC) system that allows data centers and research labs to train, deploy, and run artificial intelligence (AI) workloads and machine learning (ML) models more rapidly, which in turn enables more efficient large-scale data processing.

Here’s a breakdown of the key features of NVIDIA DGX H100:

- Powered by H100 GPUs. The NVIDIA H100 Tensor Core GPU, based on the Hopper architecture, serves as the foundation of the DGX H100. Compared with previous generations such as the A100, these GPUs are further optimized for training, inference, and other AI and deep learning workloads.

- Networking and connectivity. High-speed NVIDIA Quantum InfiniBand networking allows multiple DGX H100 systems to work together as a cluster, accelerating workloads by distributing tasks across machines seamlessly.

- Memory. Each DGX H100 system provides 640 GB of GPU memory (80 GB of HBM3 on each of its eight GPUs), along with the high memory bandwidth essential for handling large datasets in AI model training and inference.

- Software ecosystem. DGX H100 systems are integrated with the NVIDIA AI Enterprise software suite, which includes AI model training tools, deep learning frameworks, and GPU-optimized applications for HPC.

- Data center-ready. The DGX H100 is designed with hardware and software tools that allow it to be deployed at scale, supporting multi-node configurations for large-scale AI research and production.

- High-speed scalability. Multiple DGX H100 systems can be connected into larger AI infrastructure, which suits them to enterprises that require large-scale AI research in advanced fields such as natural language processing (NLP), computer vision, and generative AI models.

DGX H100 Architecture Explained

NVIDIA DGX H100 architecture and DGX SuperPOD with H100 systems are both designed for high-performance AI and ML workloads, but they operate at different scales and are tailored to meet distinct requirements.

DGX H100 reference architecture consists of several key components:

- H100 GPUs. Each DGX H100 system includes 8 NVIDIA H100 Tensor Core GPUs. These GPUs significantly accelerate the matrix operations commonly used in neural networks, support FP8 precision for faster AI training and inference, and offer MIG (Multi-Instance GPU) technology, which allows each GPU to be partitioned into smaller units for multi-tenant use.

- NVLink and NVSwitch. Within each DGX H100 system, the eight GPUs are interconnected by fourth-generation NVLink and NVSwitch, which allows each GPU to communicate with any other GPU in the system at full NVLink bandwidth (900 GB/s per GPU) and enables ultra-fast GPU-to-GPU communication.

- High-speed networking. NVIDIA Quantum InfiniBand (or Ethernet) networking connects the DGX H100 system to the broader data center infrastructure, enabling the high-speed communication between systems that is critical for multi-node configurations and scaling, especially in supercomputing clusters.

- High-performance x86 CPUs. Each DGX H100 uses two Intel Xeon Platinum 8480C processors, which coordinate with the GPUs to manage workloads, handle non-GPU-specific tasks, and control overall system operations.

- Memory and storage. The HBM3 memory in each H100 GPU allows the system to handle large datasets. DGX H100 systems also include 2 TB of system memory (RAM) and 30 TB of NVMe SSD storage for high-speed data access.

- Software stack. This includes NVIDIA AI Enterprise and NVIDIA Base Command for managing multiple DGX H100 systems, GPU-optimized deep learning frameworks like PyTorch, TensorFlow, and MXNet, and the CUDA and cuDNN libraries that facilitate efficient GPU programming for AI workloads.
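To put the NVLink bandwidth in perspective, here is a back-of-the-envelope sketch of how long an idealized ring all-reduce (a collective commonly used to average gradients in data-parallel training) might take across the eight GPUs in one DGX H100. It assumes the 900 GB/s NVLink figure is bidirectional, leaving roughly 450 GB/s in each direction, and it ignores latency, protocol overhead, and any switch-level optimizations. The helper name and the 7B-parameter example model are hypothetical, and real collective libraries such as NCCL choose their own algorithms.

```python
def ring_allreduce_seconds(message_bytes: float, num_gpus: int,
                           link_bytes_per_s: float) -> float:
    """Bandwidth-only estimate for a ring all-reduce.

    In a ring, each GPU sends (and receives) 2 * (N - 1) / N times the
    message size, so time = traffic per GPU / per-direction link bandwidth.
    Latency and protocol overhead are ignored.
    """
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to exchange
    traffic_per_gpu = 2 * (num_gpus - 1) / num_gpus * message_bytes
    return traffic_per_gpu / link_bytes_per_s


# Assumption: the 900 GB/s NVLink figure is bidirectional (~450 GB/s each way).
NVLINK_BYTES_PER_S_ONE_WAY = 450e9
# Hypothetical example: gradients of a 7B-parameter model stored in FP16 (2 bytes each).
GRADIENT_BYTES = 7e9 * 2

t = ring_allreduce_seconds(GRADIENT_BYTES, num_gpus=8,
                           link_bytes_per_s=NVLINK_BYTES_PER_S_ONE_WAY)
print(f"~{t * 1e3:.1f} ms per all-reduce")  # prints ~54.4 ms per all-reduce
```

In this simple model, doubling the message size doubles the estimate, while adding GPUs barely changes it because the 2(N-1)/N factor approaches 2. That bandwidth-bound behavior is why fast intra-node links matter for large models.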

DGX SuperPOD with H100 reference architecture

The DGX SuperPOD with H100 systems is an architecture for large-scale infrastructure that extends beyond a single DGX H100 system to provide an entire AI-optimized data center solution. It is essentially a cluster of DGX H100 systems that work together in a coordinated manner for ultra-large AI workloads.

Key features of the DGX SuperPOD with H100 reference architecture:

- Massive scalability. A DGX SuperPOD can scale from 20 DGX H100 systems up to over 500 nodes, each containing 8 H100 GPUs, resulting in a cluster with thousands of GPUs working in parallel. This enables extremely large-scale AI model training and inference and the processing of enormous datasets and compute requirements, such as training large language models (LLMs) at the scale of GPT-4 or handling sophisticated simulation tasks.

- High-speed interconnect. Quantum InfiniBand provides ultra-high-speed interconnects between nodes within the NVIDIA DGX SuperPOD H100 reference architecture, ensuring low-latency, high-bandwidth communication between the DGX systems in the cluster. This network fabric supports AI workloads that must share data simultaneously across many GPUs in different systems.

- DGX H100 Pods. Each Pod in a SuperPOD is typically 4-8 DGX H100 systems in a rack connected via high-speed networking. Pod architecture allows easy expansion and modularity.

- Centralized management and orchestration. DGX SuperPOD with H100 uses NVIDIA Base Command, a software suite for centralized management of multi-node clusters, job scheduling, data center monitoring, AI workload orchestration, and model deployment at scale.

- Advanced cooling and power efficiency. Because of their immense compute density, DGX SuperPOD with H100 deployments feature efficient air or liquid cooling solutions and optimized power management.

- Distributed, high-performance storage. The DGX SuperPOD with H100 reference architecture integrates technologies like NVIDIA GPUDirect Storage, which minimizes latency by providing a direct data path between storage and GPU memory. This ensures that large datasets can be accessed quickly for training and inference.
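The scale figures above translate into cluster totals with simple multiplication. This minimal sketch assumes 8 GPUs and 80 GB of HBM3 per node, as listed for DGX H100, and treats systems per rack as a parameter (the 4-8 range cited above). It does not count management nodes, network switches, or storage appliances, and `superpod_size` is a hypothetical helper rather than an NVIDIA sizing tool.

```python
import math

GPUS_PER_NODE = 8    # DGX H100: 8 x H100 GPUs
HBM_GB_PER_GPU = 80  # 80 GB HBM3 per H100


def superpod_size(nodes: int, systems_per_rack: int = 4) -> dict:
    """Rough totals for a cluster of DGX H100 nodes.

    systems_per_rack is an assumption (the text cites 4-8 per rack);
    switches, storage, and management nodes are not counted.
    """
    gpus = nodes * GPUS_PER_NODE
    return {
        "nodes": nodes,
        "gpus": gpus,
        "gpu_memory_tb": gpus * HBM_GB_PER_GPU / 1000,
        "racks": math.ceil(nodes / systems_per_rack),  # partial racks round up
    }


for n in (20, 500):
    print(superpod_size(n))
```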

DGX H100 and DGX SuperPOD H100 side by side:

- Scale. DGX H100 offers a single node (8 H100 GPUs), while DGX SuperPOD with H100 is a large-scale cluster of 20 or more nodes, and potentially thousands of GPUs.

- Target audience. While DGX H100 systems are ideal for medium-sized AI workloads and enterprises, DGX SuperPOD with H100 is better suited for large enterprises, research institutions, and hyperscale AI model training.

- Storage. DGX H100 systems use local storage per system, while DGX SuperPOD with H100 uses distributed storage for the entire cluster.

- Management. DGX H100 systems are managed individually or in small clusters, while large DGX SuperPOD with H100 clusters are managed centrally with NVIDIA Base Command.

DGX H100 Specifications and Core Components

NVIDIA DGX H100 specs and core components reflect its purpose of accelerating deep learning, machine learning, and HPC tasks at the enterprise and research levels.

NVIDIA DGX H100 Specifications

- High-speed networking: 8 x 400 Gbps InfiniBand (Quantum IB) or Ethernet

- 8 x NVIDIA H100 Tensor Core GPUs

- 80 GB HBM3 per GPU (640 GB total)

- Dual Intel Xeon Platinum 8480C CPUs

- 2 TB DDR5 system memory (RAM)

- 30 TB NVMe SSD

- NVLink and NVSwitch (900 GB/s GPU-to-GPU bandwidth) interconnect

- Approximately 10.2 kW maximum system power usage

- NVIDIA AI Enterprise, CUDA, cuDNN, NVIDIA Base Command software
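One way to read the spec list is as a per-node record from which aggregate figures follow. The sketch below encodes the listed values in a hypothetical `DGXNodeSpec` dataclass (not an NVIDIA API). It converts the network ports from gigabits to gigabytes per second (8 bits per byte) and does not model protocol overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DGXNodeSpec:
    """Per-node figures from the spec list (hypothetical helper class)."""
    gpus: int = 8
    hbm_gb_per_gpu: int = 80        # HBM3 per H100
    network_ports: int = 8
    gbits_per_port: int = 400       # InfiniBand or Ethernet
    system_ram_tb: float = 2.0      # DDR5
    nvme_tb: float = 30.0

    @property
    def total_hbm_gb(self) -> int:
        return self.gpus * self.hbm_gb_per_gpu

    @property
    def network_gbytes_per_s(self) -> float:
        # Aggregate port speed, converted from gigabits to gigabytes (8 bits per byte).
        return self.network_ports * self.gbits_per_port / 8


spec = DGXNodeSpec()
print(f"{spec.total_hbm_gb} GB HBM3, {spec.network_gbytes_per_s:.0f} GB/s network")
# prints: 640 GB HBM3, 400 GB/s network
```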

DGX H100 vs A100

DGX A100 and DGX H100 systems are both designed for AI workloads, but they are based on different architectures and represent different generations of NVIDIA infrastructure:

- Architecture. DGX H100 systems are built on the newer Hopper architecture, while DGX A100 systems are built on the older Ampere architecture.

- GPU model. Each system contains 8 NVIDIA Tensor Core GPUs: H100 GPUs in DGX H100 systems and A100 GPUs in DGX A100 systems.

- GPU memory. DGX H100 offers 80 GB of HBM3 per GPU, compared with 40 GB (HBM2) or 80 GB (HBM2e) per A100 GPU.

- Memory bandwidth. Each H100 GPU delivers more than 3 TB/s of memory bandwidth, versus about 2 TB/s for the A100 (80 GB).

- GPU interconnect. The GPUs in a DGX H100 communicate over fourth-generation NVLink (900 GB/s per GPU) and NVSwitch, while those in a DGX A100 use third-generation NVLink (600 GB/s per GPU) and NVSwitch.

- CPU. DGX A100 systems use dual 64-core AMD EPYC 7742 processors, while DGX H100 systems use dual Intel Xeon Platinum 8480C processors.

- System memory (RAM). DGX H100 has 2 TB of DDR5 system memory, compared with 1 TB of DDR4 in DGX A100.

- 2x faster networking. DGX H100 systems offer 8 x 400 Gbps InfiniBand, versus 8 x 200 Gbps InfiniBand in DGX A100. Both also support Ethernet.

- 2x more storage. DGX H100 includes 30 TB of NVMe SSD storage, compared with 15 TB in DGX A100.
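The generational multipliers can be checked directly from the numbers in the comparison. This small sketch computes the DGX H100 to DGX A100 ratio for several listed specs; the dictionary keys are illustrative names, not an official schema.

```python
# (DGX H100, DGX A100) values as listed in the comparison above.
LISTED_SPECS = {
    "nvlink_gb_per_s_per_gpu": (900, 600),
    "network_gbps_per_port": (400, 200),
    "system_ram_tb": (2, 1),
    "nvme_storage_tb": (30, 15),
}


def h100_to_a100_ratios(specs: dict) -> dict:
    """Return how many times larger each DGX H100 figure is than DGX A100's."""
    return {name: h100 / a100 for name, (h100, a100) in specs.items()}


for name, ratio in h100_to_a100_ratios(LISTED_SPECS).items():
    print(f"{name}: {ratio:.1f}x")
```

The output shows a 1.5x step for NVLink and 2x for networking, system memory, and storage.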

How Much Does DGX H100 Cost?

There are several factors to consider here, including upfront purchase costs, licensing, and power consumption:

DGX H100 pricing for a single system ranges between $300,000 and $500,000, depending on its configuration and the costs of things like support, software licenses, and installation. Larger NVIDIA DGX SuperPODs can cost millions of dollars, depending on the scale and infrastructure.

NVIDIA DGX H100 Power Consumption

Power consumption is a significant part of the cost of operating a DGX H100, especially for organizations with high-density AI workloads. NVIDIA rates the DGX H100 at a maximum system power usage of approximately 10.2 kW.

Real-world power consumption depends on workload intensity and system utilization, but for AI training and other high-performance tasks, the system typically operates close to its maximum power draw.

For example, a DGX H100 running continuously at full load would consume approximately 10.2 kW × 24 hours/day × 365 days/year = 89,352 kWh/year. Costs vary by region, but at $0.10 per kWh the annual power cost would be approximately $8,935, rising to about $13,403 per year at $0.15 per kWh.

If a system runs at a lower level of utilization, its power consumption and costs will be proportionally lower. Cooling infrastructure (air or liquid) is an additional operating cost, since the system generates significant heat, and it might add 10-20% to the total operational power cost.

Support and maintenance are an additional expense. NVIDIA typically offers support plans for DGX H100 systems, which can range from $10,000 to $50,000 per year depending on the level of service.
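The cost arithmetic in this section can be bundled into a few reusable functions. This is a rough sketch under stated assumptions: power draw is a constant fraction (utilization) of the maximum, cooling is modeled as a percentage overhead on the electricity bill (the 10-20% range mentioned above, with an assumed 15% default), and support is a flat annual fee. The function names are hypothetical, and the model leaves out licensing, installation, networking, and facility costs.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_kwh(power_kw: float, utilization: float = 1.0) -> float:
    """Energy used in one year at a constant fraction of maximum power draw."""
    return power_kw * HOURS_PER_YEAR * utilization


def annual_operating_cost(power_kw: float, price_per_kwh: float,
                          utilization: float = 1.0,
                          cooling_overhead: float = 0.15,
                          support_per_year: float = 0.0) -> float:
    """Electricity, plus cooling as a fraction of the electricity bill, plus support.

    The 0.15 cooling default is an assumption within the 10-20% range in the text.
    """
    power_cost = annual_energy_kwh(power_kw, utilization) * price_per_kwh
    return power_cost * (1 + cooling_overhead) + support_per_year


def total_cost_of_ownership(purchase_price: float, years: int, **opex) -> float:
    """Purchase price plus `years` of operating cost (excludes licensing and facilities)."""
    return purchase_price + years * annual_operating_cost(**opex)
```

Because every input is a parameter, the same functions can compare full-load and partial-utilization scenarios, regional electricity prices, or support tiers side by side.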

DGX H100 Use Cases

The NVIDIA DGX H100 is designed for cutting-edge AI infrastructure and high-performance computing (HPC) applications. Here are some examples of the primary use cases for the DGX H100:

- Natural language processing, deep learning recommendation models, and generative AI models for text, image, video, and music platforms

- Scientific research and simulation models in fields like climate science, astrophysics, chemistry, drug discovery, and genomics research

- AI models for autonomous driving, robotics, and drone navigation

- Healthcare applications such as medical imaging and personalized medicine

- Financial services applications such as fraud detection, algorithmic trading, and risk management

- Digital twins for use in manufacturing, urban planning, or automotive/aerospace applications

- Film and video editing and media production

- Real-time interactive gaming apps

- Behavioral data-driven advertising

- Large-scale AI inference for business innovations and optimizations such as virtual assistants and customer analysis for e-commerce platforms

- Threat detection and intrusion prevention in cybersecurity systems

Benefits of DGX H100

In addition to what has already been discussed, NVIDIA DGX H100 has several important features and considerations to note:

- Transformer Engine. The H100's Transformer Engine accelerates the transformer-based models widely used in generative AI, natural language processing, and large language models like GPT, BERT, and T5 by dynamically choosing between FP8 and FP16 precision for each layer.

- FP8 precision. This 8-bit floating-point format, introduced in the Hopper architecture, allows AI models to be trained and run at higher speeds, with lower memory consumption and smaller data footprints than higher-precision formats like FP16 or FP32.

- Multi-instance GPU (MIG) technology support. MIG allows each GPU to be partitioned into up to seven instances, each operating independently with its own memory, cache, and compute resources. This lets users run multiple smaller AI tasks concurrently on the same GPU, improving resource utilization and flexibility. It is ideal for multi-tenant environments such as cloud providers, or for organizations that need to run diverse workloads simultaneously without dedicating an entire GPU to each task.

- Containerization and virtualization. NVIDIA offers NVIDIA GPU Cloud (NGC), a catalog of pre-built AI models and containers optimized for the H100 architecture, which allows AI workloads to be deployed rapidly with minimal setup time.

- Air or liquid-cooling. DGX H100 systems can be equipped with air- or liquid-cooled options, depending on the deployment environment. Liquid cooling is often preferred for dense installations like SuperPODs, as it helps manage heat more efficiently and reduces energy costs.

- Trusted Platform Module (TPM) security features. The DGX H100's TPM 2.0 security module provides hardware-based security functions like encryption key management, and Secure Boot ensures that only trusted software runs during the boot process, protecting the system from low-level malware and attacks.
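FP8 actually comes in two encodings: E4M3 (4 exponent bits, 3 mantissa bits) and E5M2 (5 exponent bits, 2 mantissa bits). E4M3 is commonly used for weights and activations, while E5M2's wider range suits gradients. The sketch below is a plain-Python decoder written from those published bit layouts (exponent bias 7 for E4M3, 15 for E5M2), not NVIDIA library code. It also includes a hypothetical `weights_gb` helper that estimates weight memory per precision, with activations, optimizer state, and caches left out.

```python
import math


def decode_fp8(byte: int, fmt: str = "e4m3") -> float:
    """Decode one FP8 byte in the E4M3 or E5M2 layout into a Python float."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("FP8 values are a single byte")
    sign = -1.0 if byte & 0x80 else 1.0
    if fmt == "e4m3":
        exp, man, bias, man_bits = (byte >> 3) & 0xF, byte & 0x7, 7, 3
        if exp == 0xF and man == 0x7:  # E4M3 has a NaN pattern but no infinities
            return math.nan
    elif fmt == "e5m2":
        exp, man, bias, man_bits = (byte >> 2) & 0x1F, byte & 0x3, 15, 2
        if exp == 0x1F:  # IEEE-style specials: infinity or NaN
            return sign * math.inf if man == 0 else math.nan
    else:
        raise ValueError(f"unknown FP8 format: {fmt}")
    frac = man / (1 << man_bits)
    if exp == 0:  # subnormal: no implicit leading 1
        return sign * frac * 2.0 ** (1 - bias)
    return sign * (1.0 + frac) * 2.0 ** (exp - bias)


BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weights_gb(num_params: float, fmt: str) -> float:
    """Memory for model weights alone, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


print(decode_fp8(0x7E, "e4m3"), decode_fp8(0x7B, "e5m2"))  # 448.0 57344.0
print(weights_gb(70e9, "fp16"), weights_gb(70e9, "fp8"))   # 140.0 70.0
```

The largest finite E4M3 value is 448, while E5M2 reaches 57,344, which illustrates the precision-versus-range trade-off between the two encodings. The weight-memory helper shows why halving the bytes per parameter matters on a GPU with 80 GB of memory.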

WEKA and DGX H100

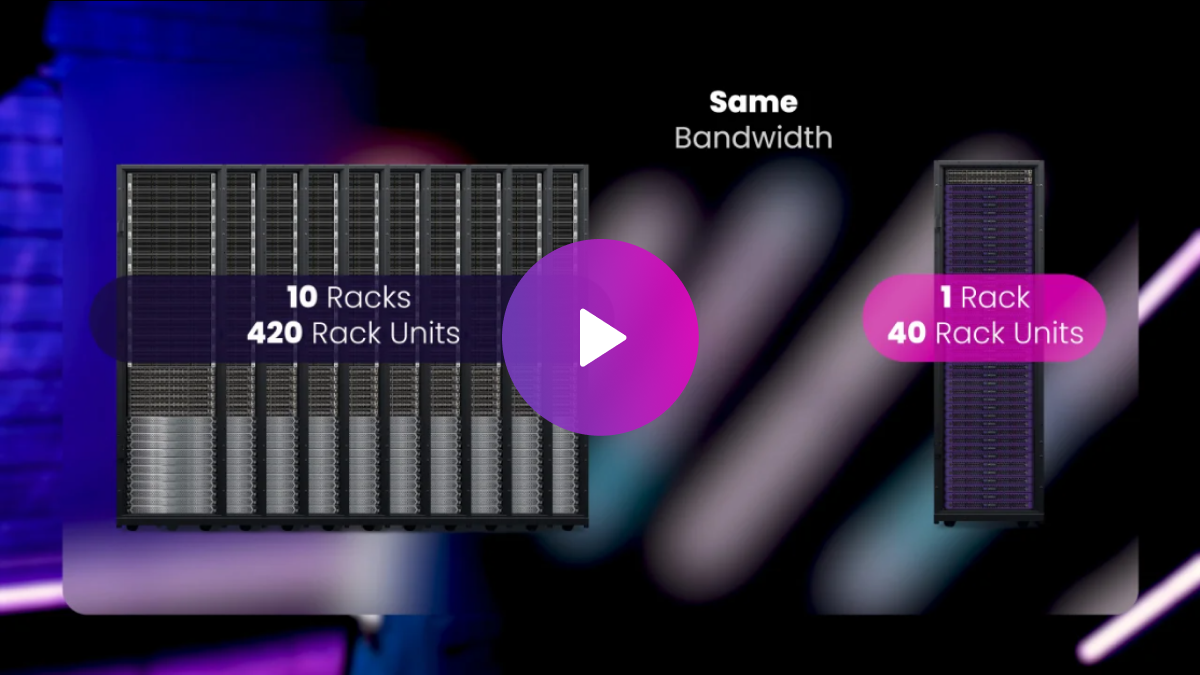

WEKA partners with NVIDIA to optimize AI workloads by combining the power of its WEKA® Data Platform with NVIDIA DGX SuperPOD™ systems. The WEKA Data Platform is designed to accelerate AI innovation through its modular, scale-out architecture, which efficiently transforms data storage silos into dynamic data pipelines. This integration enables seamless data management across on-premises, cloud, and hybrid environments.

Certified for NVIDIA DGX H100 Systems, WEKA’s platform ensures exceptional performance and scalability, delivering up to 10x the bandwidth and 6x more IOPS. With best-of-breed components like Intel Xeon processors and NVIDIA networking technologies, the WEKApod data appliance provides a turnkey solution that scales effortlessly to meet the demands of AI deployments, enhancing model training and deployment speed while optimizing infrastructure investments.