NVIDIA DGX SuperPOD: How it Works and More

What is NVIDIA DGX SuperPOD?

NVIDIA DGX SuperPOD is a scalable artificial intelligence (AI) infrastructure platform designed to deliver agile performance for the most challenging AI and high-performance computing (HPC) workloads. The platform is optimized for training large-scale AI models, enabling enterprises and research institutions to accelerate AI initiatives.

How does NVIDIA DGX SuperPOD differ from DGX BasePOD?

NVIDIA DGX SuperPOD and BasePOD differ primarily in scale and intended use. DGX SuperPOD is designed for large-scale AI deployments, offering extensive computational power through numerous DGX systems, advanced networking, and integrated software, making it ideal for enterprises handling massive AI workloads.

DGX BasePOD targets medium-scale deployments with a more manageable number of DGX systems and simpler networking, making it suitable for small to medium-sized businesses and departmental use. BasePOD’s moderate scale and performance provide a more flexible and cost-effective solution, enabling broader access to advanced AI capabilities without the complexity and resource requirements of SuperPOD.

In short, a DGX SuperPOD is a much larger deployment than a DGX BasePOD: it can handle far larger workloads and scale to thousands of DGX systems.

NVIDIA SuperPOD Architecture Explained

The NVIDIA DGX SuperPOD reference architecture outlines the recommended configuration and design principles for building SuperPOD infrastructure. It describes the relevant NVIDIA DGX systems and covers networking, storage solutions, and other components.

The reference architecture supports performance, scalability, and reliability in several important ways:

- Scalable infrastructure design. The reference architecture defines scalable infrastructure that allows organizations to start with a smaller deployment and easily expand as their workload demands grow. It achieves scalability using a modular approach, in which users add resources such as DGX systems, networking components, and storage incrementally as needed.

- High-performance networking. DGX SuperPOD relies on high-speed networking technologies for low-latency, high-bandwidth communication; efficient data transfer and synchronization; and parallel processing of AI workloads across multiple nodes.

- Optimized storage solutions. The reference architecture recommends storage solutions optimized for AI workloads and designed to ensure fast data access during training and inference tasks. The specific storage technologies vary with the requirements of the deployment.

- GPU-accelerated computing. DGX SuperPOD systems are driven by GPUs that are optimized for AI workloads and capable of parallel processing and high computational throughput.

- Management and orchestration. The reference architecture recommends tools that streamline the deployment, monitoring, and management of the DGX SuperPOD infrastructure and the AI supercomputing environment.

DGX SuperPOD Specs: Core Components

At a basic level, the core components of an NVIDIA DGX SuperPOD are the DGX systems themselves, which are driven by GPUs, along with high-speed interconnects and optimized storage solutions. Here is a more detailed look:

- NVIDIA DGX systems. A DGX SuperPOD is composed of multiple DGX systems, which are purpose-built servers optimized for AI workloads. These are driven by NVIDIA A100 or H100 GPUs, which offer parallel processing capabilities and high computational throughput.

- GPU architecture. A SuperPOD deployment may utilize NVIDIA A100 GPUs based on Ampere architecture or NVIDIA H100 GPUs based on Hopper architecture. (See more on the difference below.)

- High-speed interconnects. SuperPOD deployments demand high-speed networking technologies such as NVIDIA Mellanox InfiniBand. These offer low-latency, high-bandwidth communication between nodes and enable efficient data transfer and distributed computing across multiple nodes.

- Storage solutions. Storage optimized for SuperPOD deployments and their AI workloads includes NVMe SSDs or high-capacity HDDs. These are chosen to provide high throughput and low latency, ensuring fast access to data during training and inference tasks.

The typical DGX SuperPOD price varies depending on the configuration and scale of the deployment.

DGX SuperPOD H100 vs A100

The key distinction between NVIDIA DGX SuperPOD with H100 systems and DGX SuperPOD with A100 systems is GPU architecture. The DGX SuperPOD with H100 is based on the newer Hopper architecture, while the DGX SuperPOD with A100 is based on the older Ampere architecture.

The DGX SuperPOD H100 reference architecture offers improved performance and efficiency compared to the A100 version, making it better suited for demanding AI workloads.

How many DGX nodes are in a SuperPOD?

A SuperPOD can consist of hundreds or even thousands of DGX nodes, depending on the specific deployment requirements and workload demands.

DGX BasePOD Core Components

The compute, host channel adapter (HCA), and switch resources form the foundation of the DGX BasePOD. Consider how NVIDIA DGX H100 systems are composed as an example:

The NVIDIA DGX H100 system is designed for compute density, high performance, and flexibility. Key specifications include eight NVIDIA H100 GPUs and 640 GB of GPU memory. Four single-port HCAs are used for the compute fabric, and dual-port HCAs provide parallel pathways to the management and storage fabrics. The out-of-band port provides BMC access.
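The per-system figures above (eight GPUs and 640 GB of GPU memory) can be scaled to a whole pod with simple arithmetic. The following is a minimal sketch of that calculation, not an NVIDIA sizing tool: the names `PodTotals`, `pod_totals`, and `memory_per_gpu_gb` are hypothetical, and the four-system deployment in the usage example is an arbitrary illustration rather than a recommended configuration.

```python
from dataclasses import dataclass

# Per-system figures taken from the DGX H100 description in the text.
GPUS_PER_SYSTEM = 8
GPU_MEMORY_PER_SYSTEM_GB = 640


@dataclass(frozen=True)
class PodTotals:
    """Aggregate GPU resources for a hypothetical pod of DGX H100 systems."""
    systems: int
    gpus: int
    gpu_memory_gb: int


def pod_totals(systems: int) -> PodTotals:
    """Multiply the per-system figures by the number of DGX systems."""
    if systems < 1:
        raise ValueError("a pod needs at least one DGX system")
    return PodTotals(
        systems=systems,
        gpus=systems * GPUS_PER_SYSTEM,
        gpu_memory_gb=systems * GPU_MEMORY_PER_SYSTEM_GB,
    )


def memory_per_gpu_gb() -> float:
    """GPU memory per GPU implied by the per-system figures (640 / 8)."""
    return GPU_MEMORY_PER_SYSTEM_GB / GPUS_PER_SYSTEM


if __name__ == "__main__":
    totals = pod_totals(4)  # assumption: an arbitrary four-system example
    print(f"{totals.systems} systems: {totals.gpus} GPUs, "
          f"{totals.gpu_memory_gb} GB of GPU memory")
    print(f"{memory_per_gpu_gb():.0f} GB per GPU")
```

The same function applies to any node count, so it can be used to compare a small BasePOD-sized deployment with a much larger SuperPOD-sized one. It covers GPU resources only and says nothing about networking or storage requirements.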

DGX BasePOD configurations can be equipped with four types of networking switches:

NVIDIA QM9700 and QM8700 InfiniBand switches provide connectivity for the compute fabric in InfiniBand-based BasePOD configurations (the QM9700 is an NDR switch, and the QM8700 is an HDR switch). Each NVIDIA DGX system has dual connections to each of the InfiniBand switches, providing multiple low-latency, high-bandwidth paths between the systems. NVIDIA SN5600 switches are used for GPU-to-GPU fabrics, and NVIDIA SN4600 switches provide redundant connectivity for in-band management of the DGX BasePOD.

DGX SuperPOD Use Cases

NVIDIA DGX SuperPOD is ideally suited for high-performance computing and artificial intelligence development and deployment. Some use cases include:

- Deep learning and AI training. DGX SuperPOD is optimized to train large-scale deep learning models on massive datasets for use in natural language processing, image recognition, and speech recognition.

- AI supercomputing. NVIDIA SuperPOD runs AI algorithms and simulations used in scientific research, drug discovery, climate modeling, and financial analysis.

- Data analytics and insights. DGX SuperPOD extracts insights and patterns used in predictive analytics, customer segmentation, fraud detection, and personalized recommendations.

- High-performance computing (HPC). NVIDIA DGX SuperPOD can be used for traditional HPC workloads in computational fluid dynamics, finite element analysis, and molecular dynamics simulations.

- Genomics and healthcare. DGX SuperPOD accelerates genomic sequencing and analysis, enabling the identification of genetic variations, disease markers, and potential treatments.

- Autonomous vehicles and robotics. NVIDIA SuperPOD powers transportation and logistics algorithms for autonomous vehicles, drones, and robots.

- Natural language processing (NLP). DGX SuperPOD can train language models to understand and generate human-like text, enabling advanced NLP applications such as language translation, sentiment analysis, and chatbots.

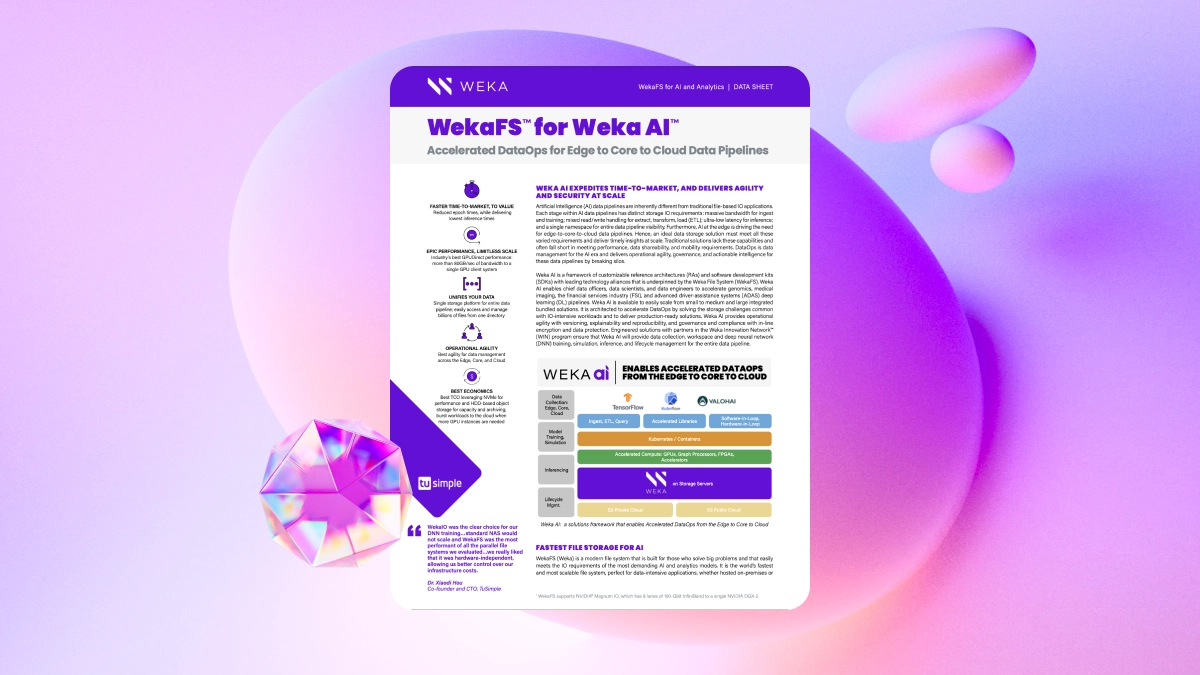

WEKA and DGX SuperPOD

NVIDIA SuperPOD certified storage solutions are tested and validated by NVIDIA for compatibility and performance with DGX SuperPOD deployments. NVIDIA SuperPOD storage solutions are optimized to provide high throughput and low latency for AI workloads, ensuring efficient data access and processing.

WEKA’s DGX SuperPOD storage solution (WEKApod) has been certified for the NVIDIA DGX SuperPOD with NVIDIA DGX H100 systems. It is engineered to create a seamless AI data management environment, integrating WEKA’s high-performance storage software with top-tier storage hardware.

WEKApod delivers up to 18,300,000 IOPS in its starting configuration, keeping the compute nodes of the DGX SuperPOD fed with data and avoiding storage bottlenecks. It uses NVIDIA ConnectX-7 network cards and InfiniBand to drive 400 Gb/s network connections for rapid data transfer between nodes.

WEKApod starts with a one-petabyte configuration of eight storage nodes and can scale to hundreds of nodes to meet demand. This ensures that enterprises can expand as their AI projects grow in complexity and size.
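To put the starting-configuration figures in per-node terms, the short sketch below divides the quoted totals by the eight storage nodes and converts the 400 Gb/s link rate to gigabytes per second. It is illustrative arithmetic only, under several assumptions: load and capacity are spread evenly across nodes, one petabyte is treated as 1,000 terabytes (decimal units), the IOPS figure is the quoted "up to" peak, and the link conversion ignores protocol overhead. The function names are hypothetical and are not part of any WEKA tool.

```python
# Figures quoted in the text for the WEKApod starting configuration.
STARTING_NODES = 8
STARTING_CAPACITY_PB = 1
STARTING_PEAK_IOPS = 18_300_000
LINK_GBITS_PER_S = 400

TB_PER_PB = 1000   # assumption: decimal units
BITS_PER_BYTE = 8


def per_node_iops() -> float:
    """Average peak IOPS per node, assuming an even spread across nodes."""
    return STARTING_PEAK_IOPS / STARTING_NODES


def per_node_capacity_tb() -> float:
    """Average capacity per node in terabytes, assuming an even spread."""
    return STARTING_CAPACITY_PB * TB_PER_PB / STARTING_NODES


def link_gbytes_per_s() -> float:
    """Raw line rate of one link in GB/s, ignoring protocol overhead."""
    return LINK_GBITS_PER_S / BITS_PER_BYTE


if __name__ == "__main__":
    print(f"IOPS per node:     {per_node_iops():,.0f}")
    print(f"Capacity per node: {per_node_capacity_tb():.0f} TB")
    print(f"Link line rate:    {link_gbytes_per_s():.0f} GB/s")
```

These averages are a rough way to reason about the quoted numbers. They do not predict how a larger configuration performs, since the text gives no scaling figures beyond the starting configuration.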

WEKA’s AI-native architecture is a crucial differentiator that addresses the inherent inefficiencies of legacy storage systems. This recent SuperPOD certification, along with WEKA’s previously announced BasePOD certification, is based on the same software stack that WEKA delivers for all of its solutions. The bottom line is that WEKA’s storage software can meet even the most challenging demands of AI workloads without becoming a bottleneck.

WEKA also announced results from software tests on the SPECStorage 2020 benchmark suite. WEKA consistently ranked highly across multiple benchmarks, indicating that it is capable of managing diverse IO profiles without tuning.

NVIDIA DGX SuperPOD is a deployment of 64 DGX systems designed to create and scale AI supercomputing infrastructure for highly complex AI challenges. Typical use cases include robotics, speech-to-vision applications, and autonomous systems that demand more rapid insights from data. WEKA is certified for DGX SuperPOD and meets the requirements for workloads that demand the “best” performance level.